Paper Reviews on Energy-Based Models for Learning and Reasoning

Review of Two Papers on Energy-Based Models (EBMs)

- Paper 1: Energy-Based Transformers are Scalable Learners and Thinkers

- Paper 2: Learning Iterative Reasoning through Energy Minimization

1. Energy-Based Transformers (EBTs)

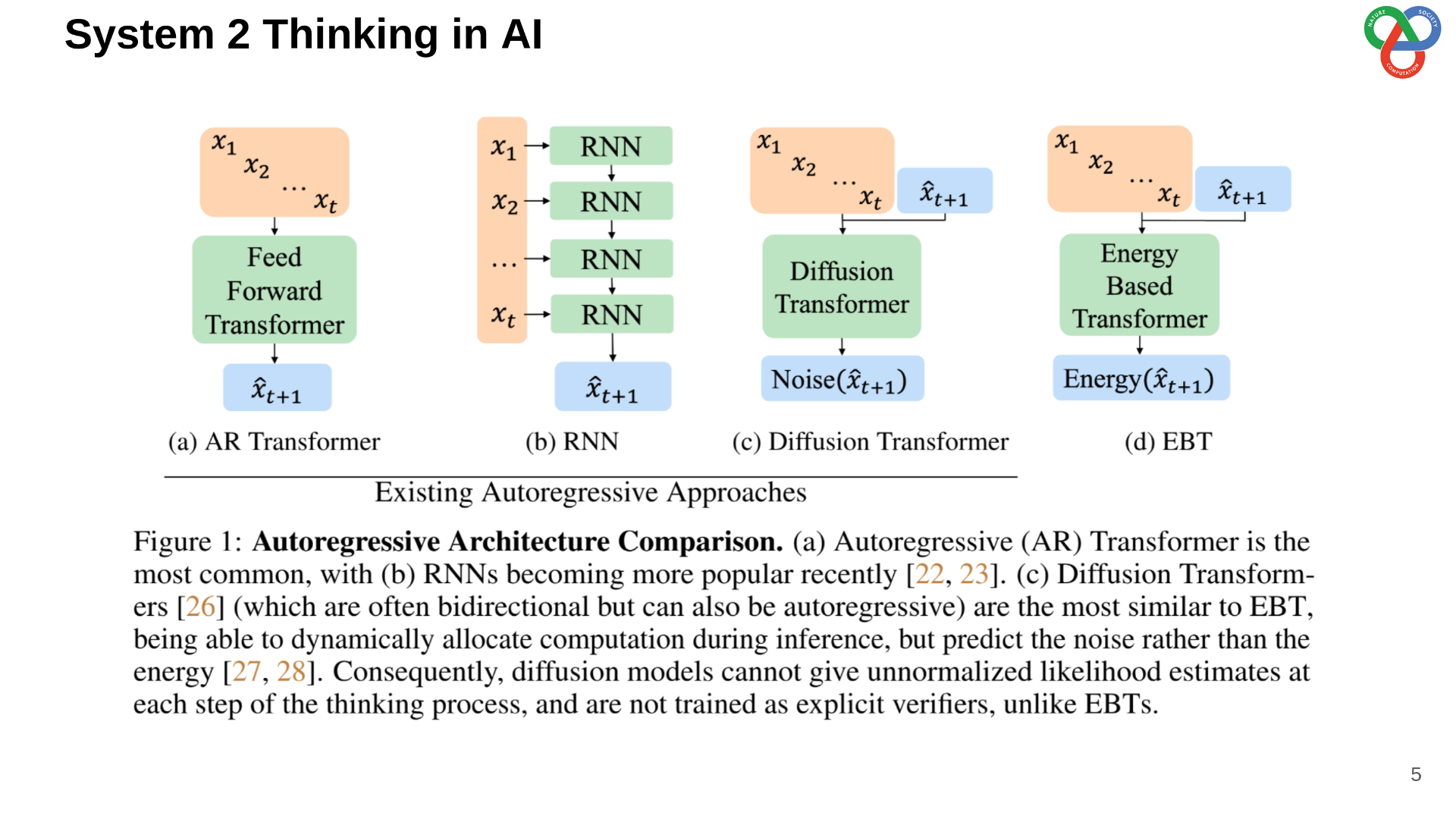

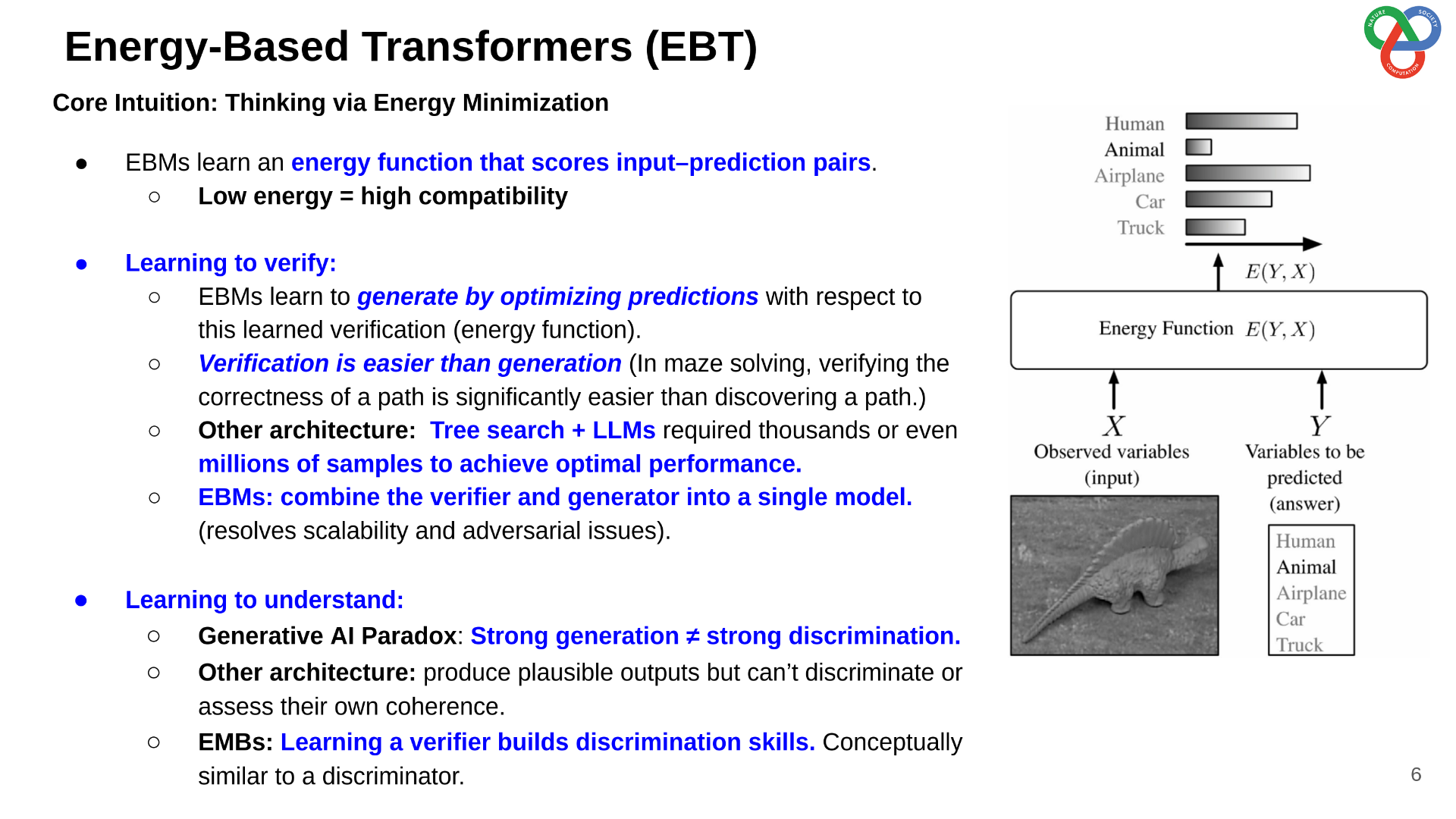

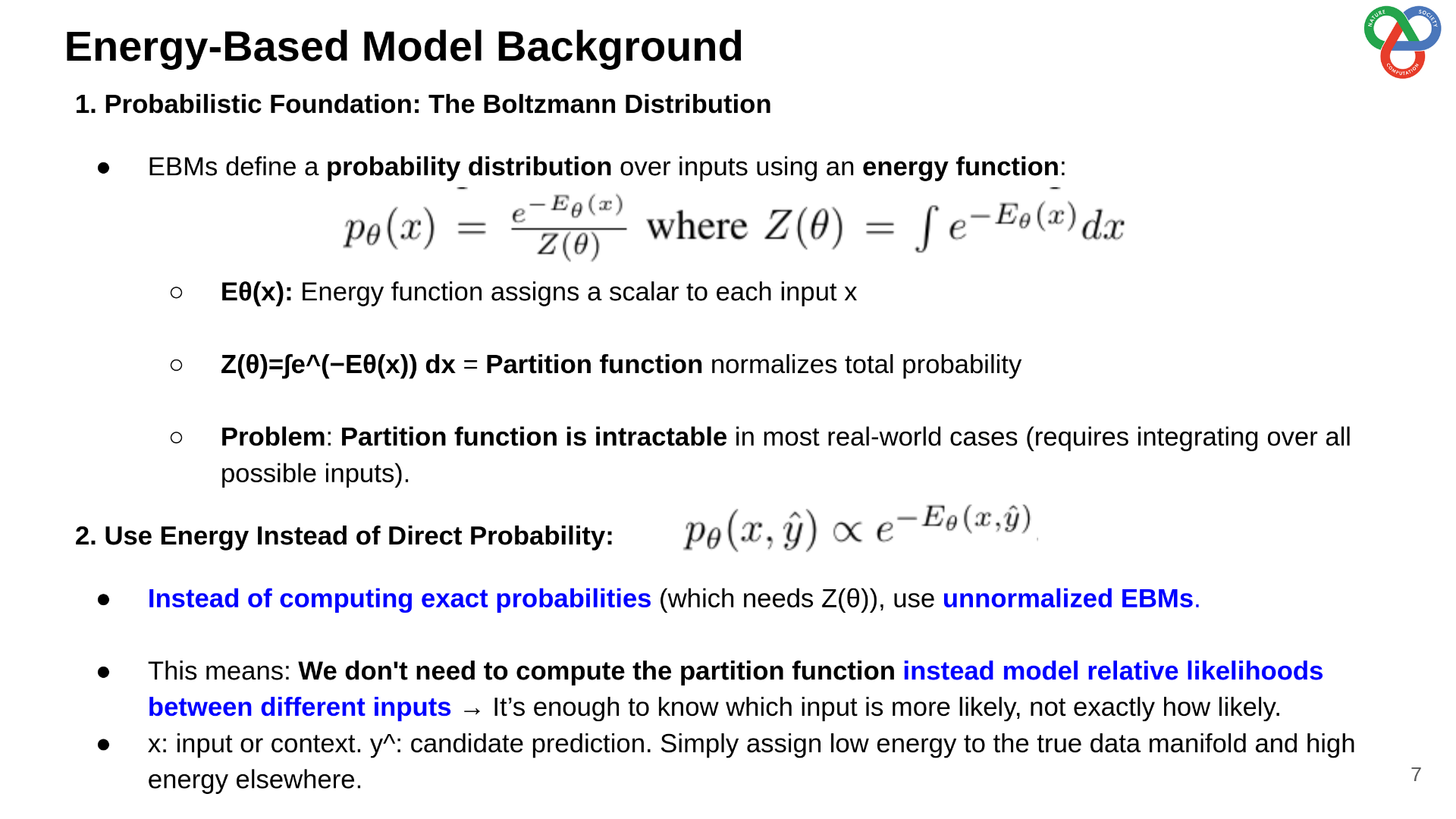

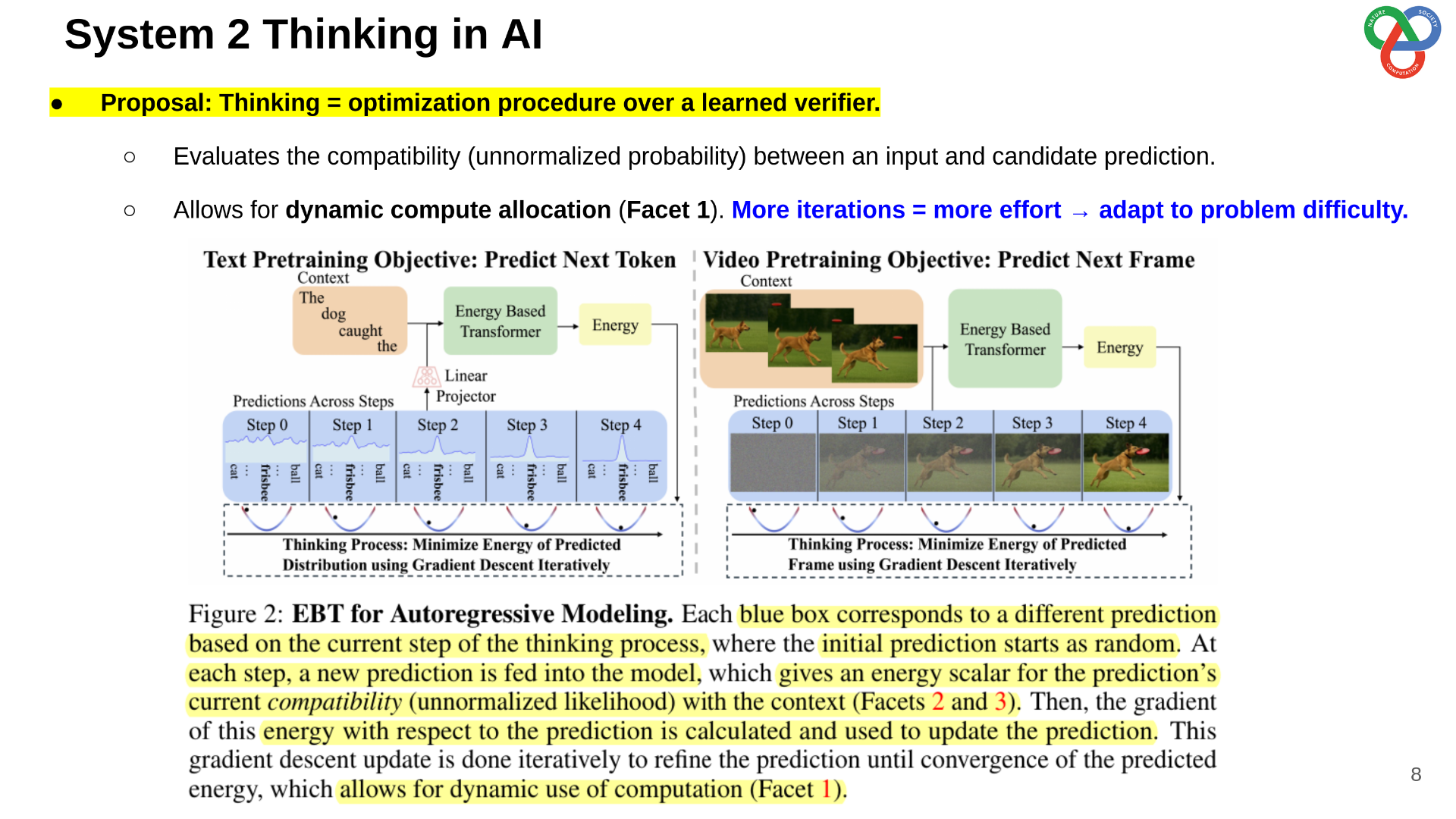

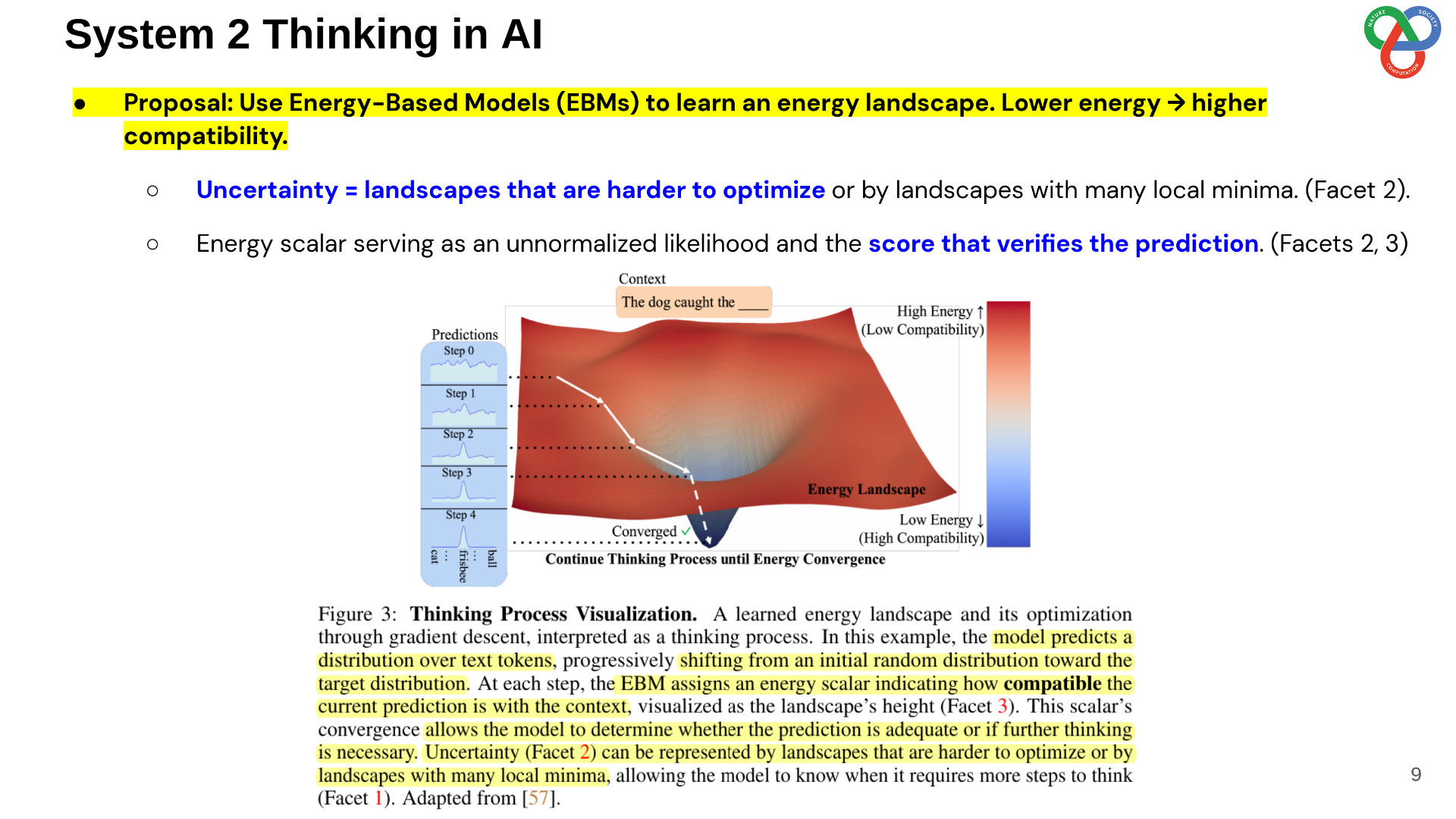

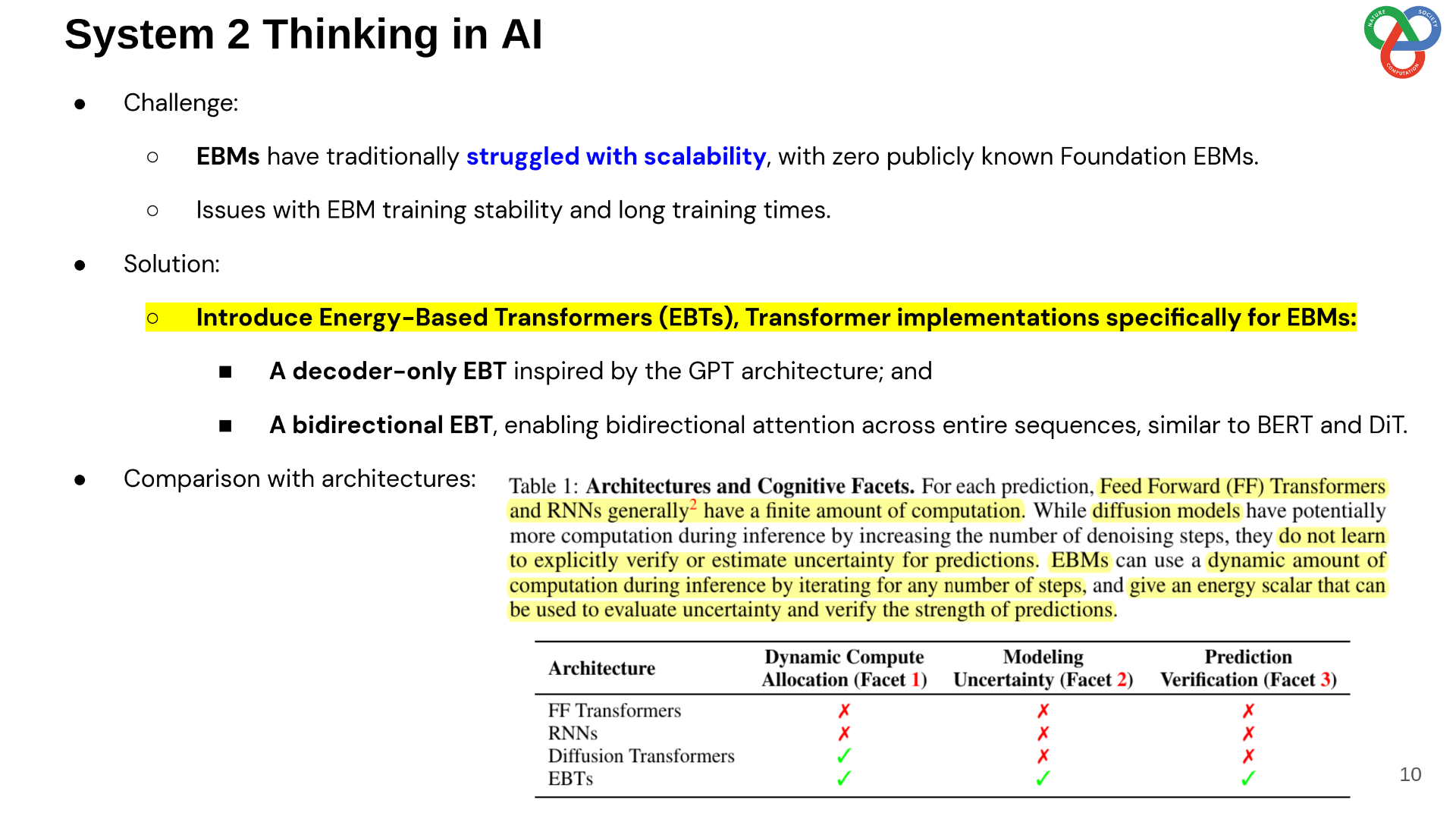

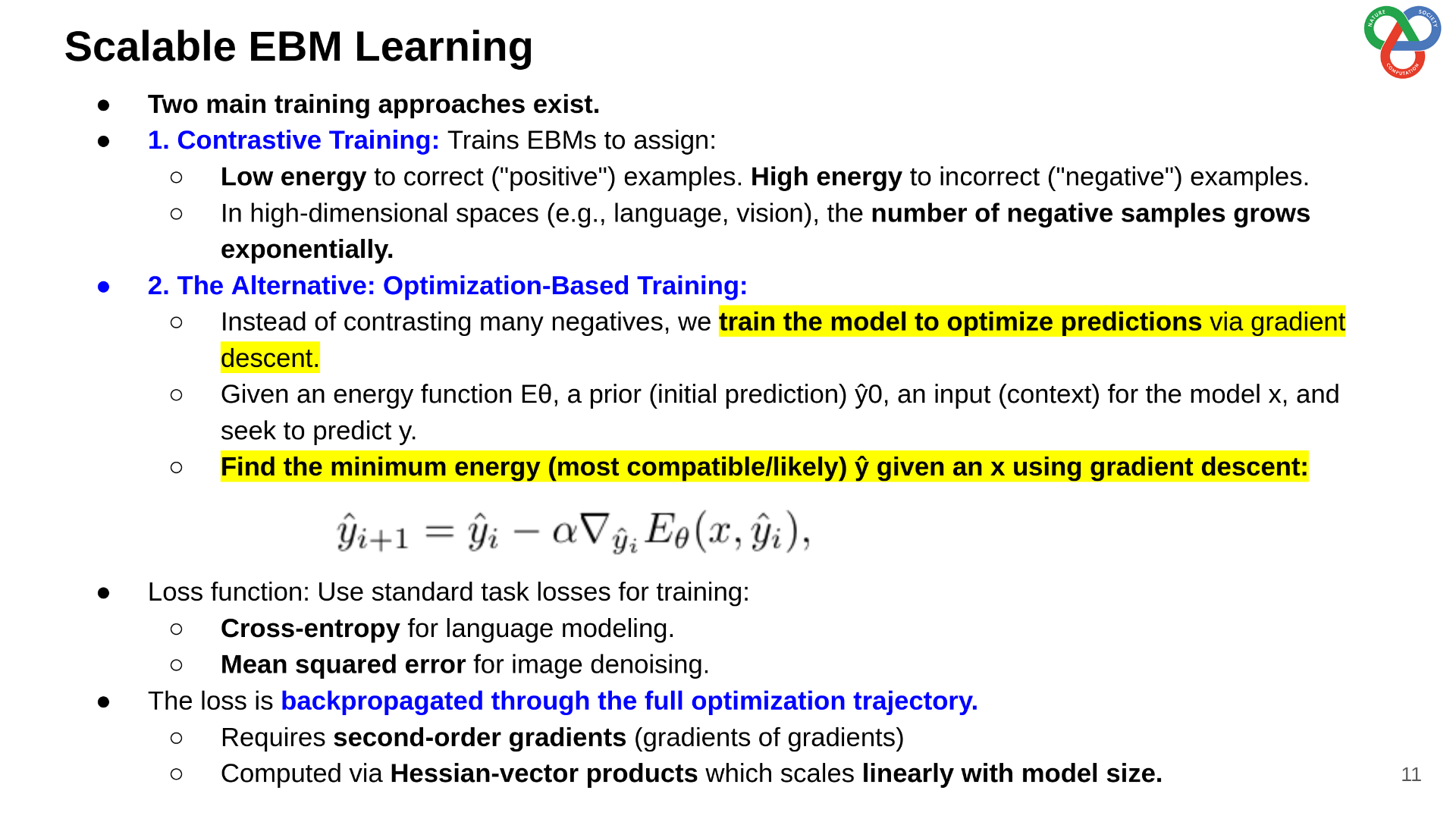

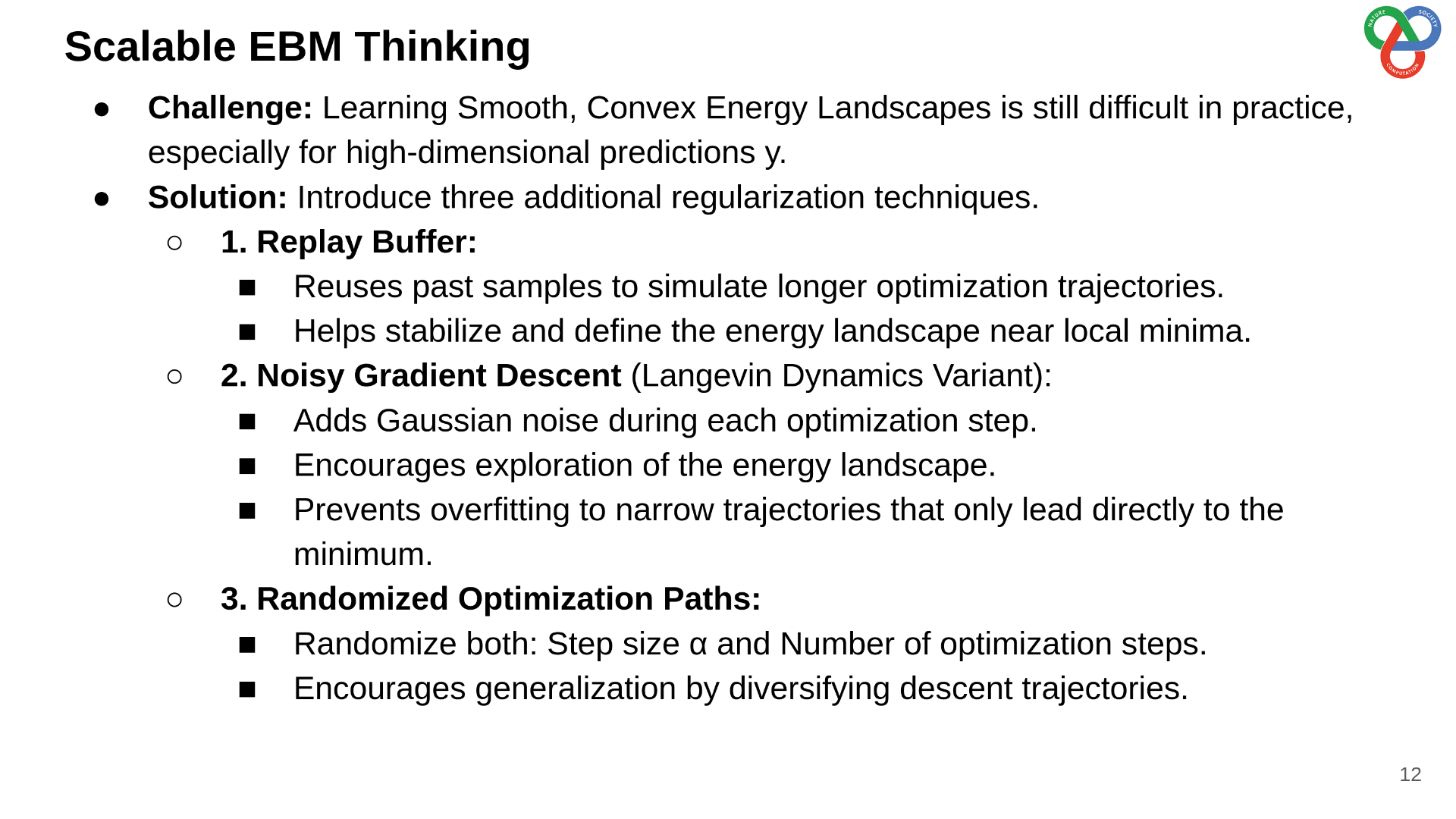

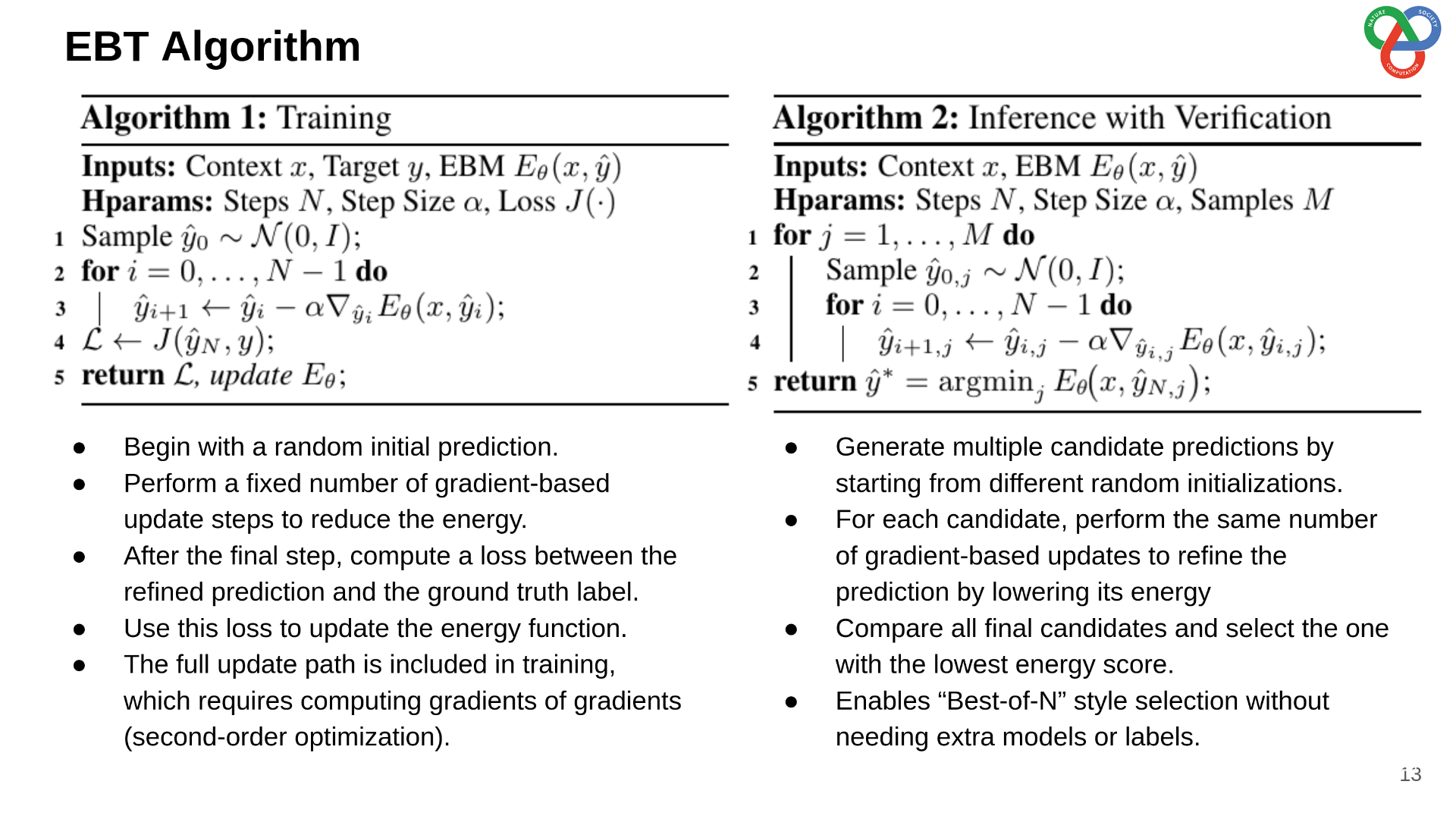

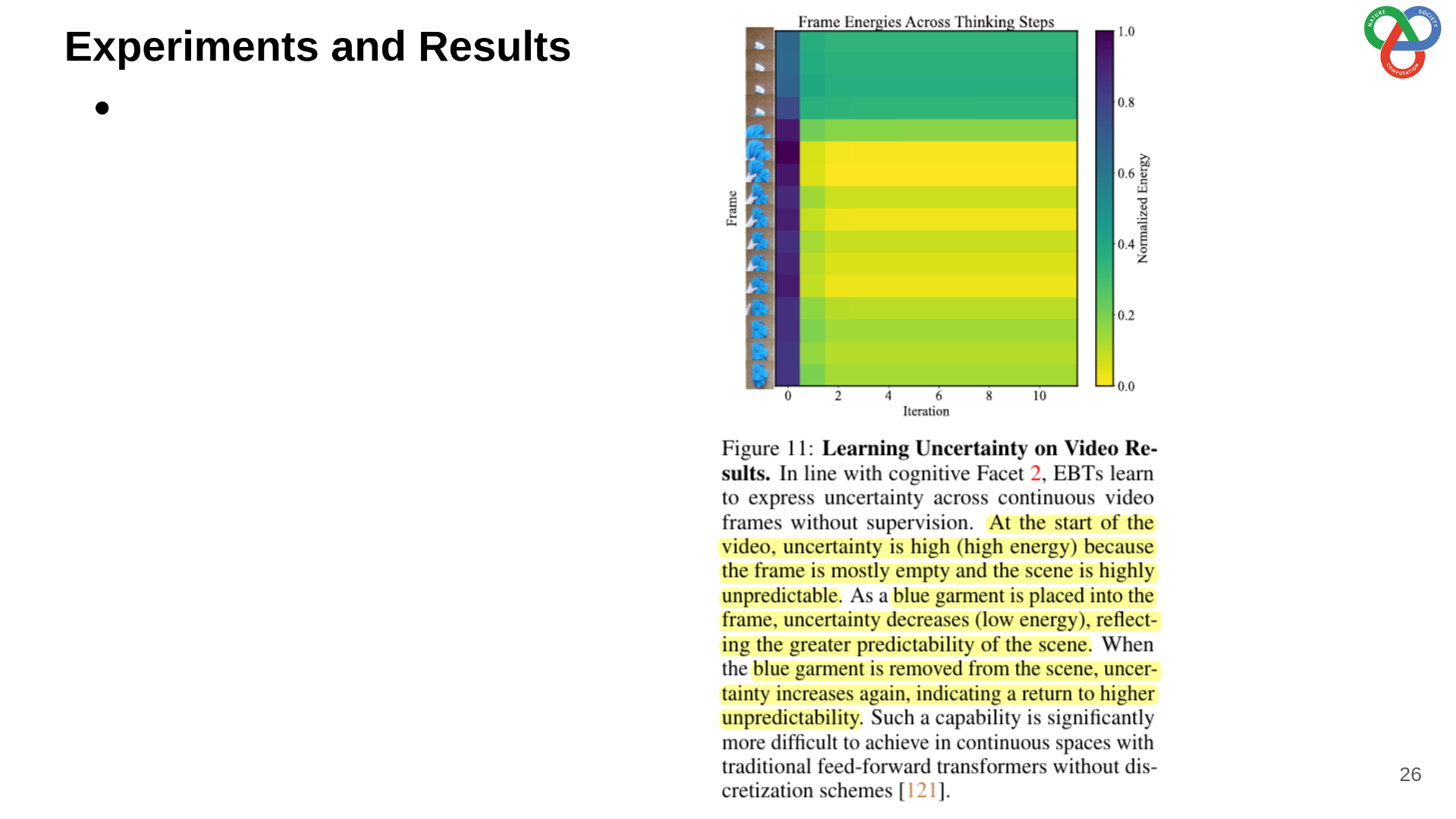

This work introduces Energy-Based Transformers (EBTs), a new class of EBMs that assign energy values to input–prediction pairs. Predictions are obtained through gradient descent-based energy minimization, reframing inference as an optimization process.
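To make "inference as optimization" concrete, here is a minimal sketch in plain Python. It is not the EBT architecture: the quadratic `energy` function, its analytic gradient, and the `predict` helper are all hypothetical stand-ins for a learned Transformer energy and autograd. It shows only the inference loop, where a candidate prediction is refined by gradient descent on the energy.

```python
def energy(x, y):
    """Toy energy (assumption): low when candidate y is close to 2 * x + 1."""
    return 0.5 * (y - (2.0 * x + 1.0)) ** 2


def energy_grad_y(x, y):
    """Analytic gradient of the toy energy with respect to the prediction y."""
    return y - (2.0 * x + 1.0)


def predict(x, y_init=0.0, step_size=0.5, num_steps=20):
    """Refine an initial guess by gradient descent on the energy landscape."""
    y = y_init
    for _ in range(num_steps):
        # Move the candidate prediction downhill in energy.
        y = y - step_size * energy_grad_y(x, y)
    return y


if __name__ == "__main__":
    x = 3.0
    y_hat = predict(x)
    print("prediction:", y_hat)
    print("energy before:", energy(x, 0.0), "energy after:", energy(x, y_hat))
```

In a real EBM the gradient would come from backpropagating through the energy network with respect to the prediction rather than from a closed-form expression.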

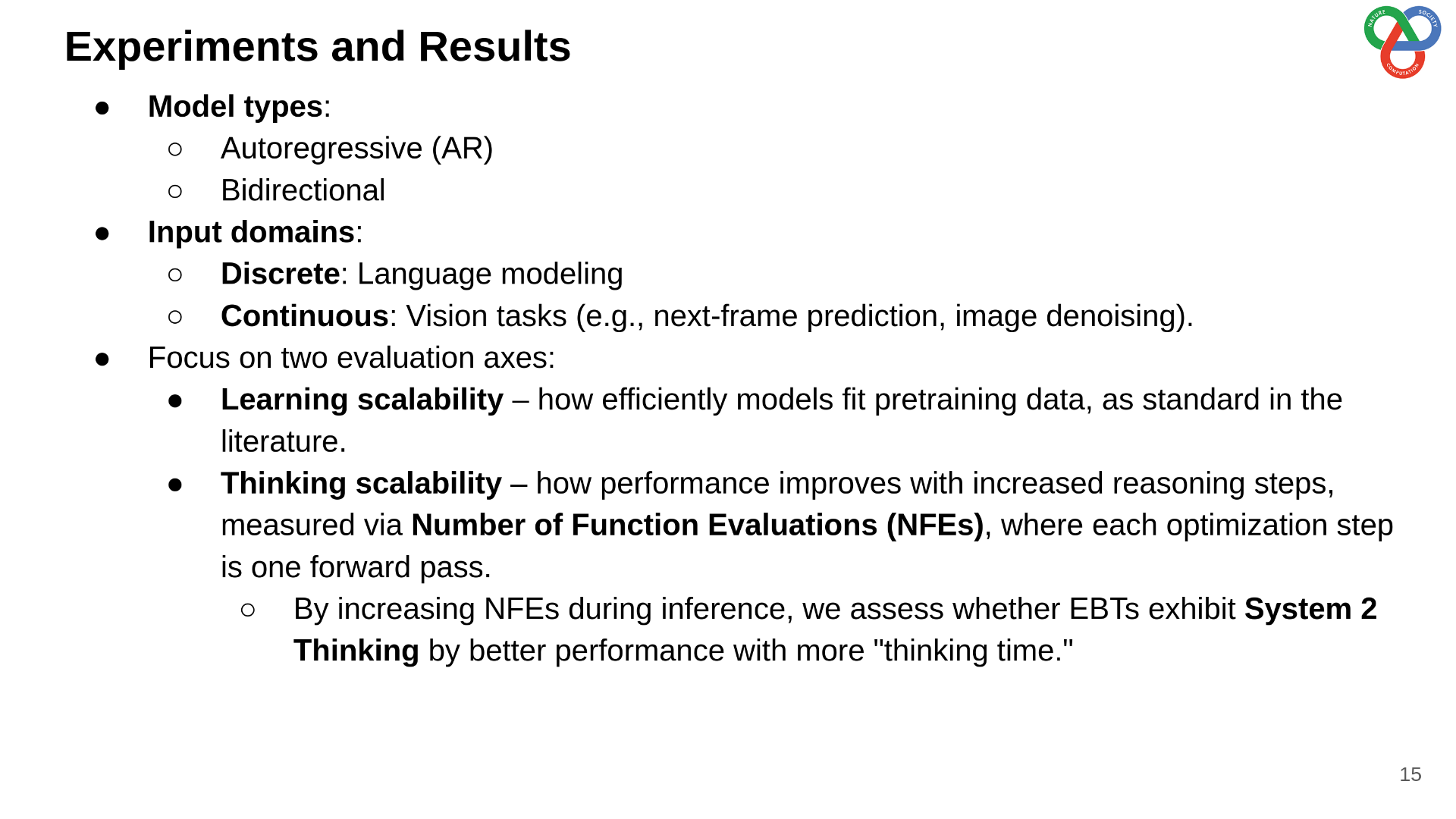

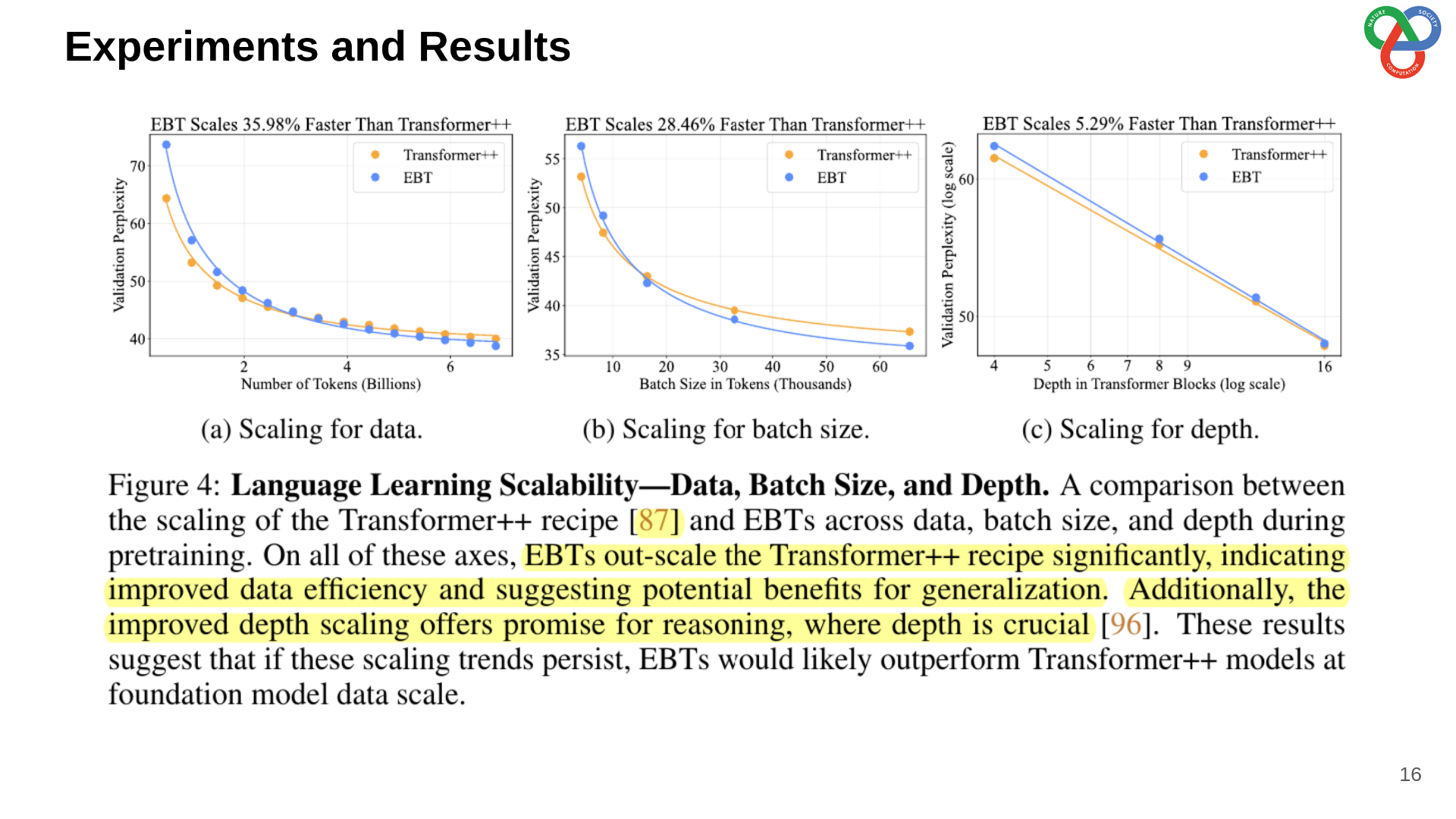

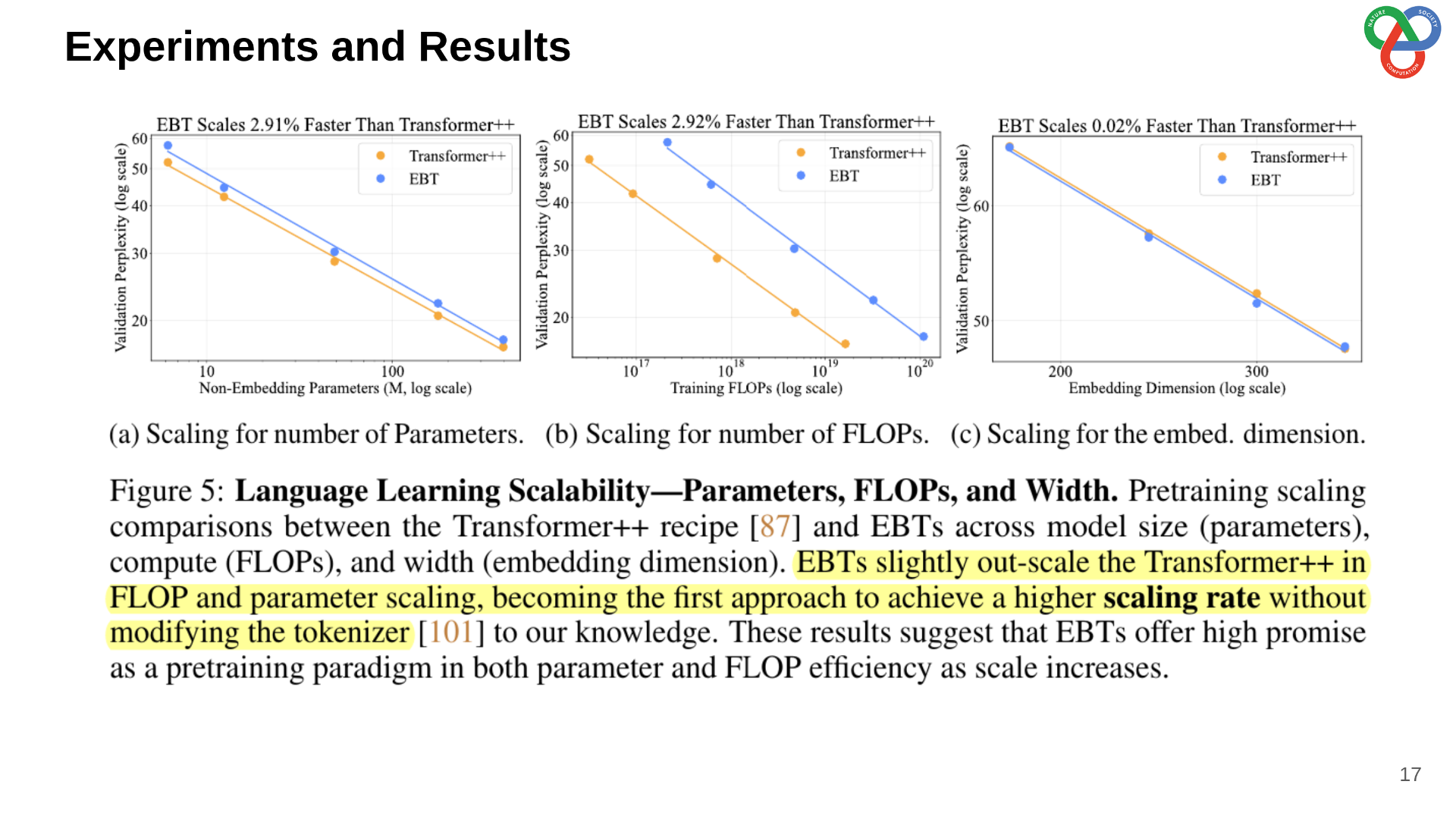

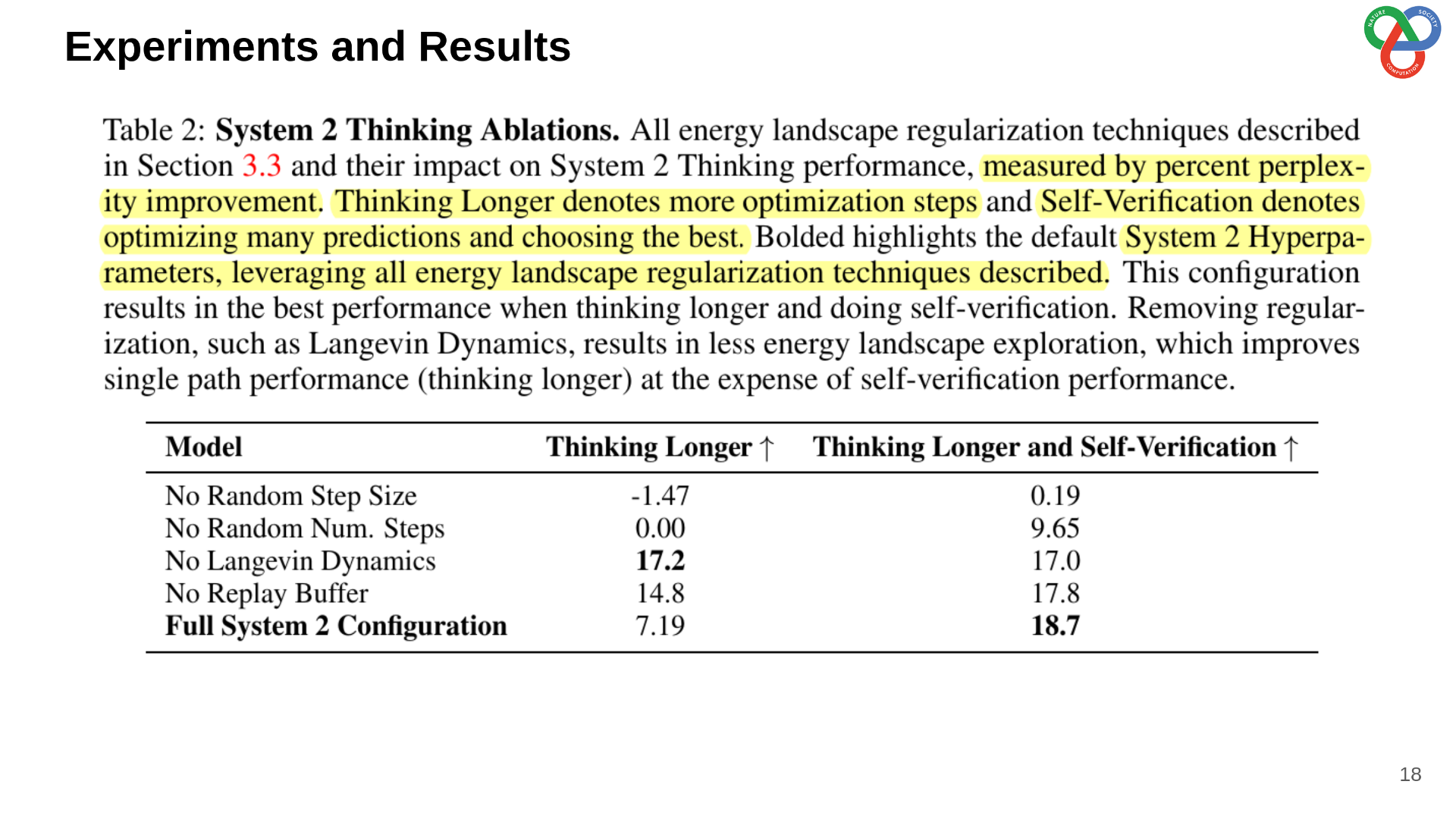

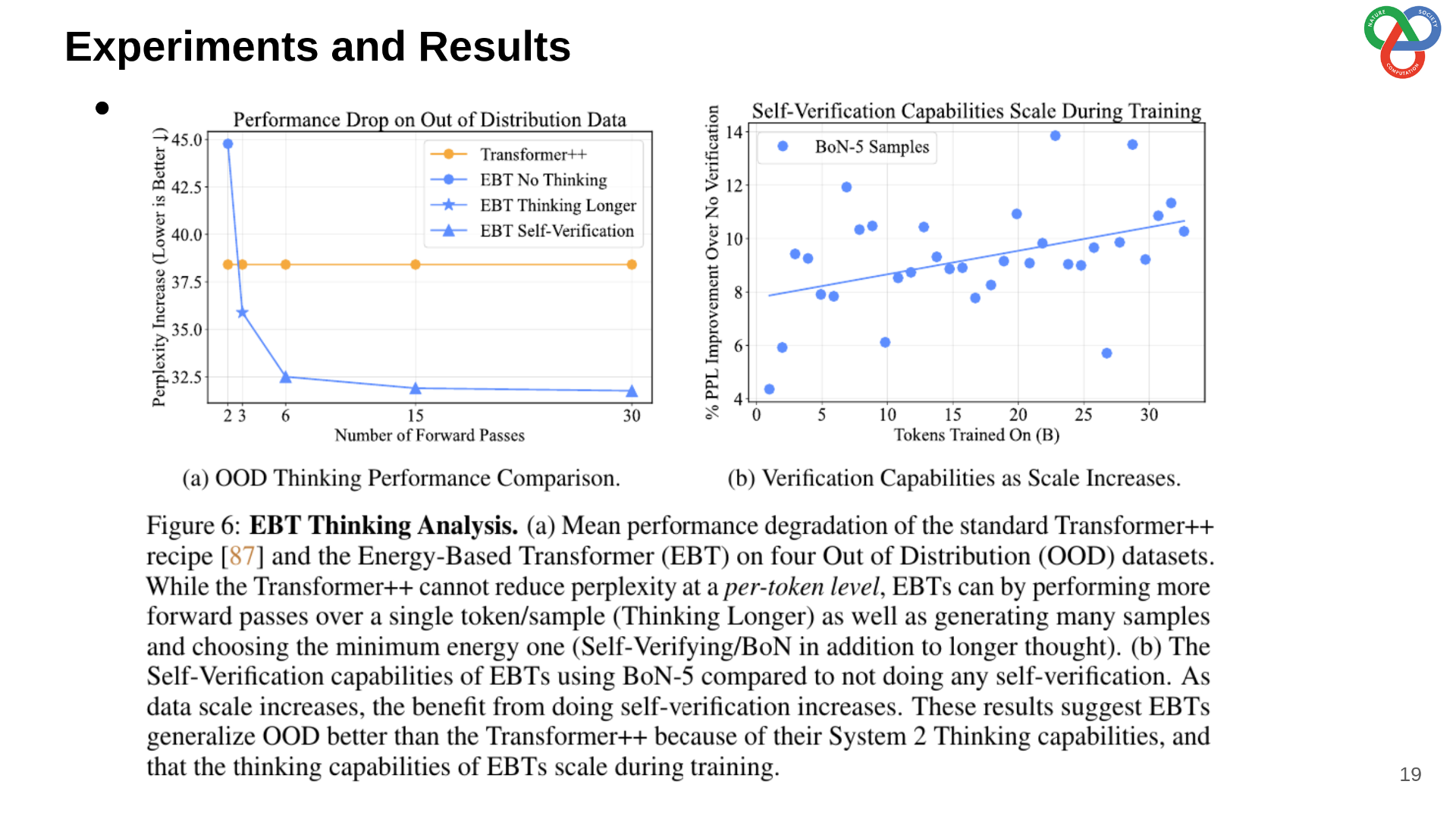

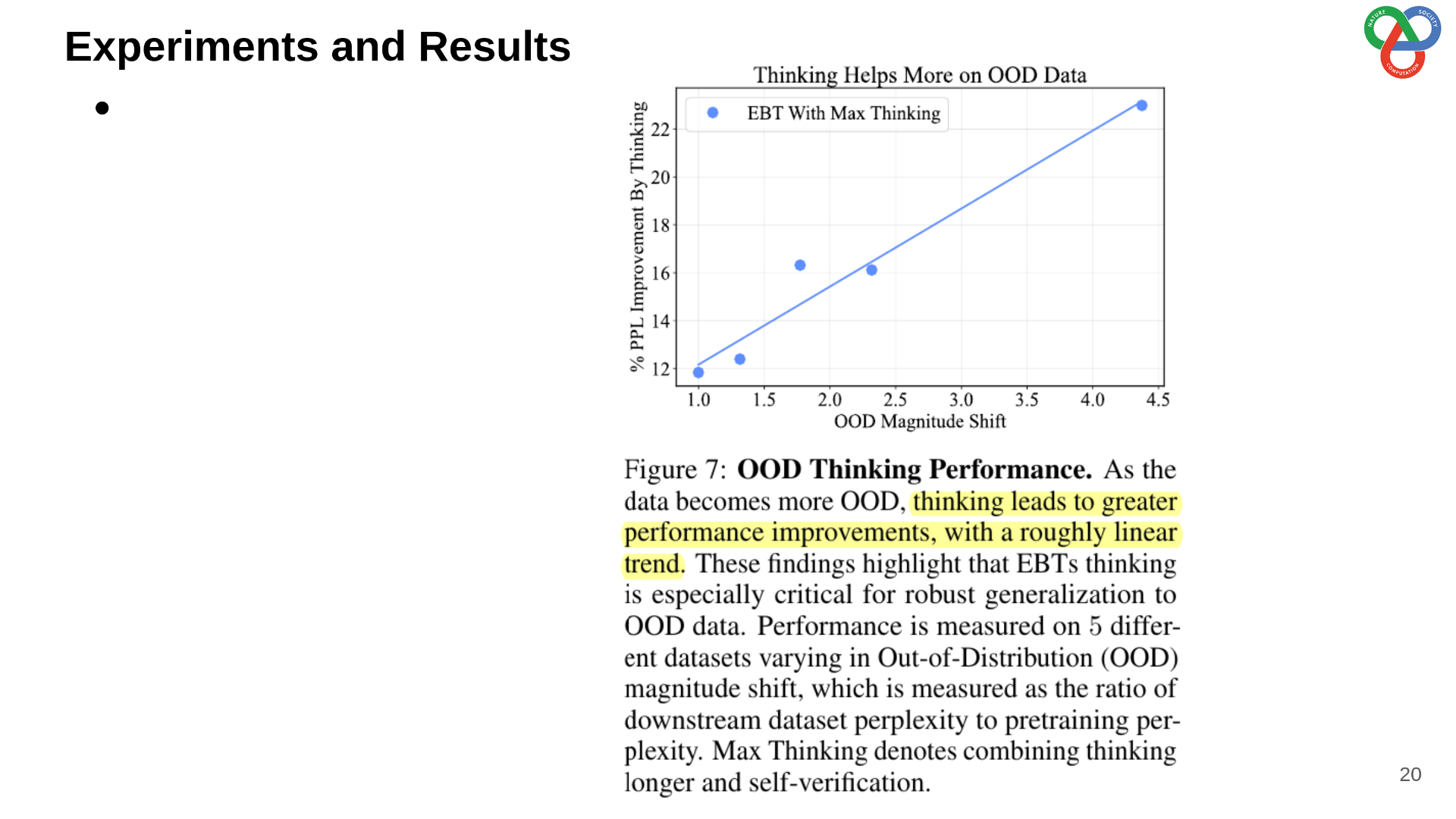

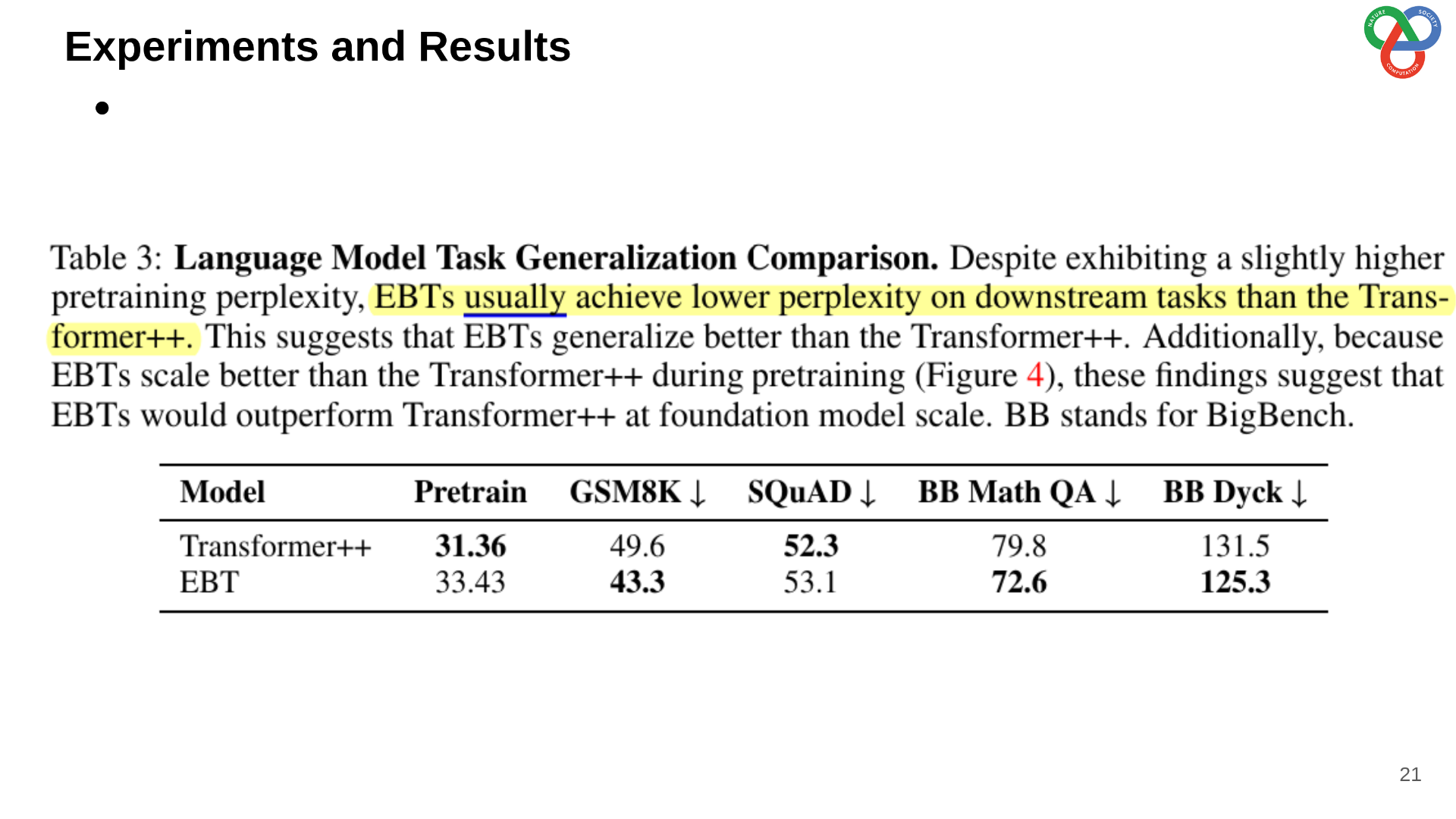

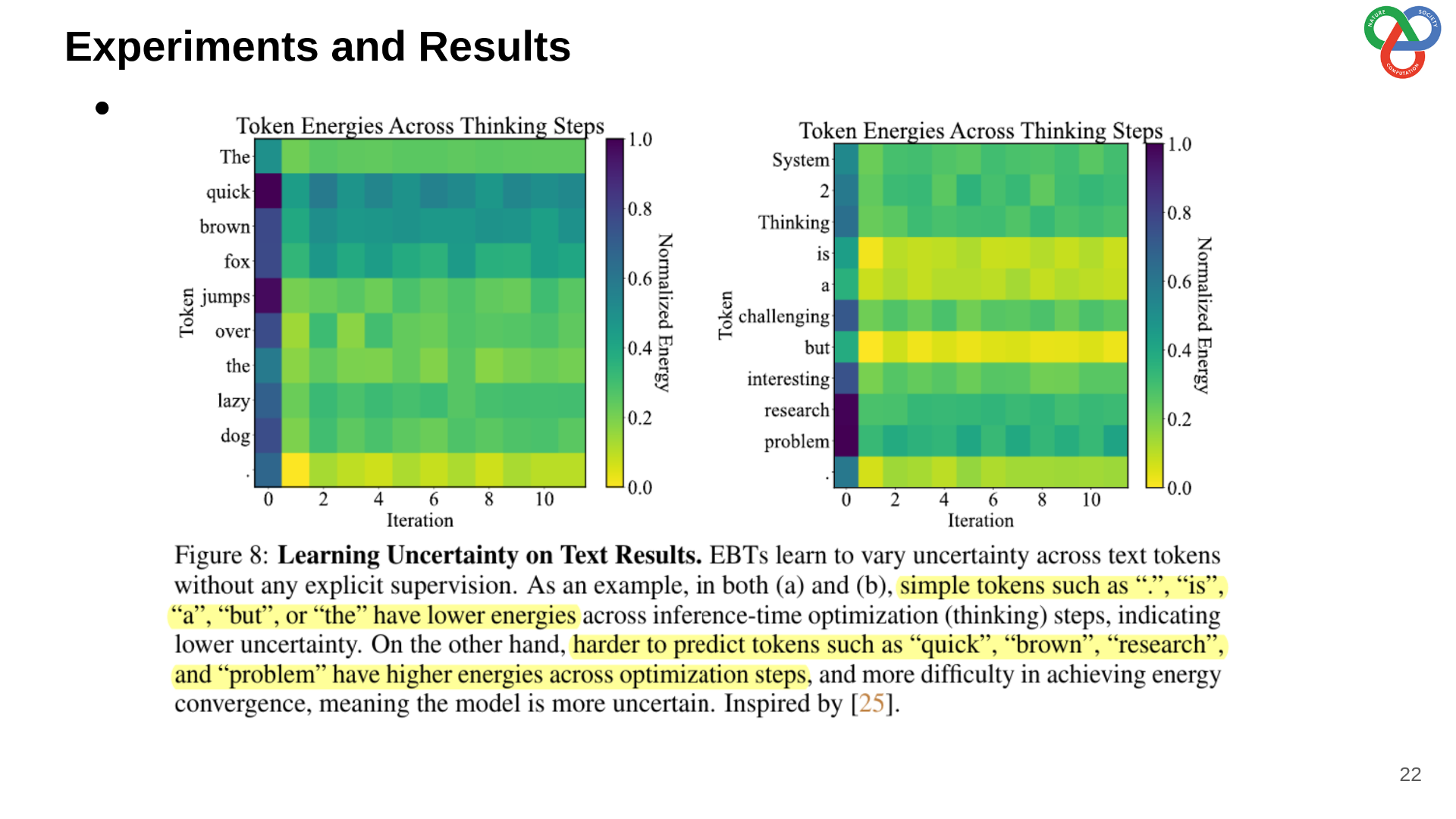

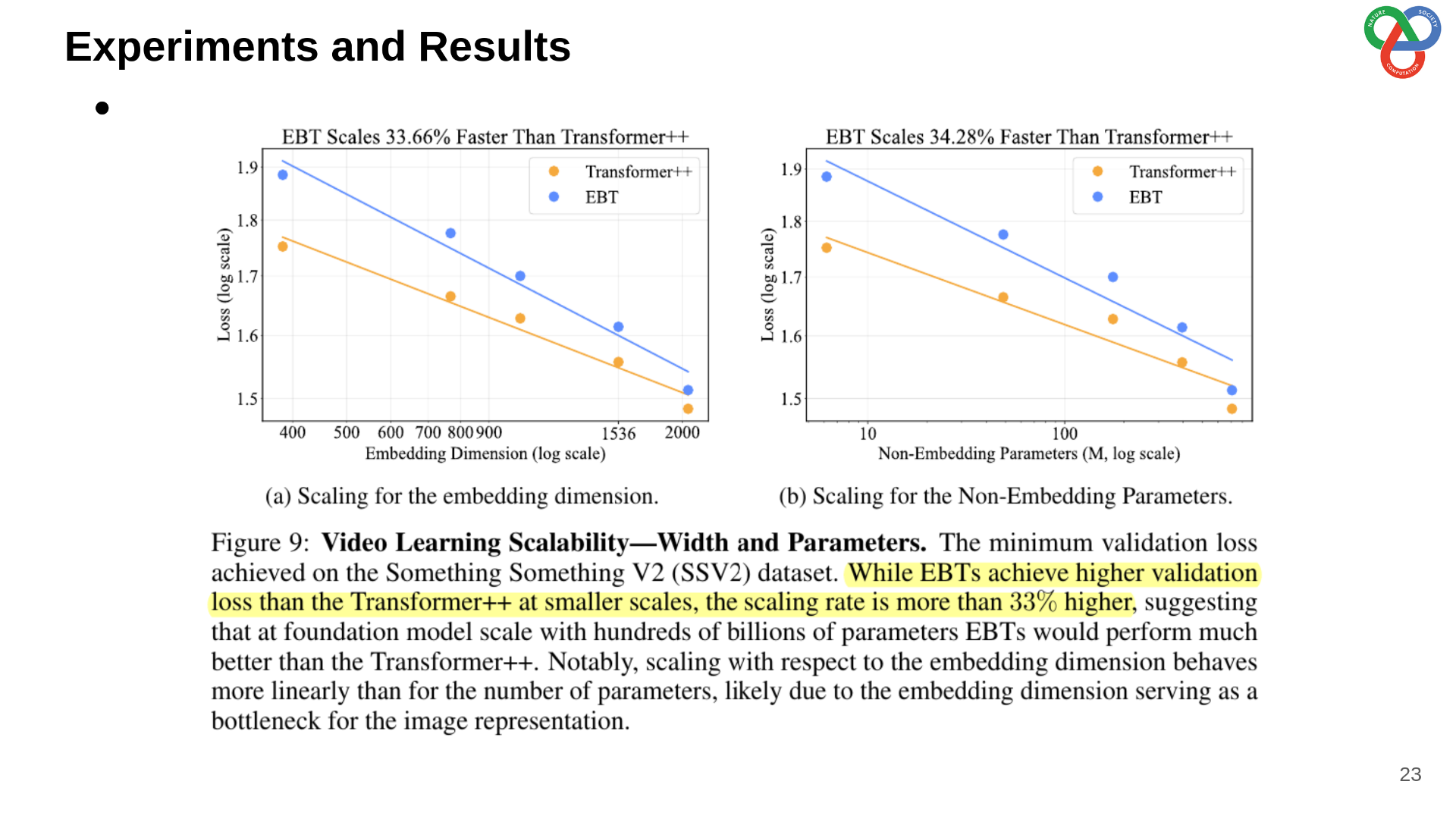

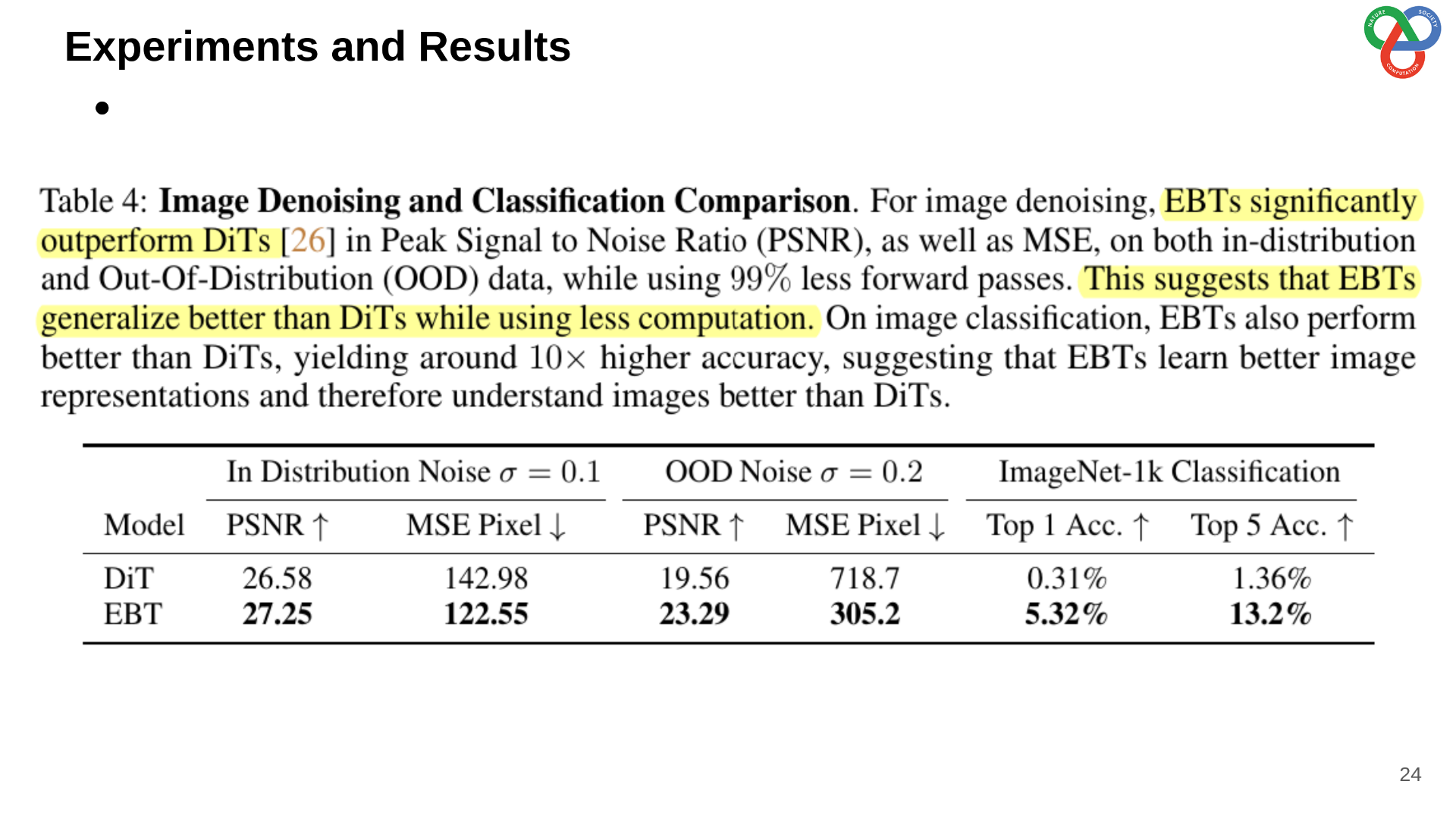

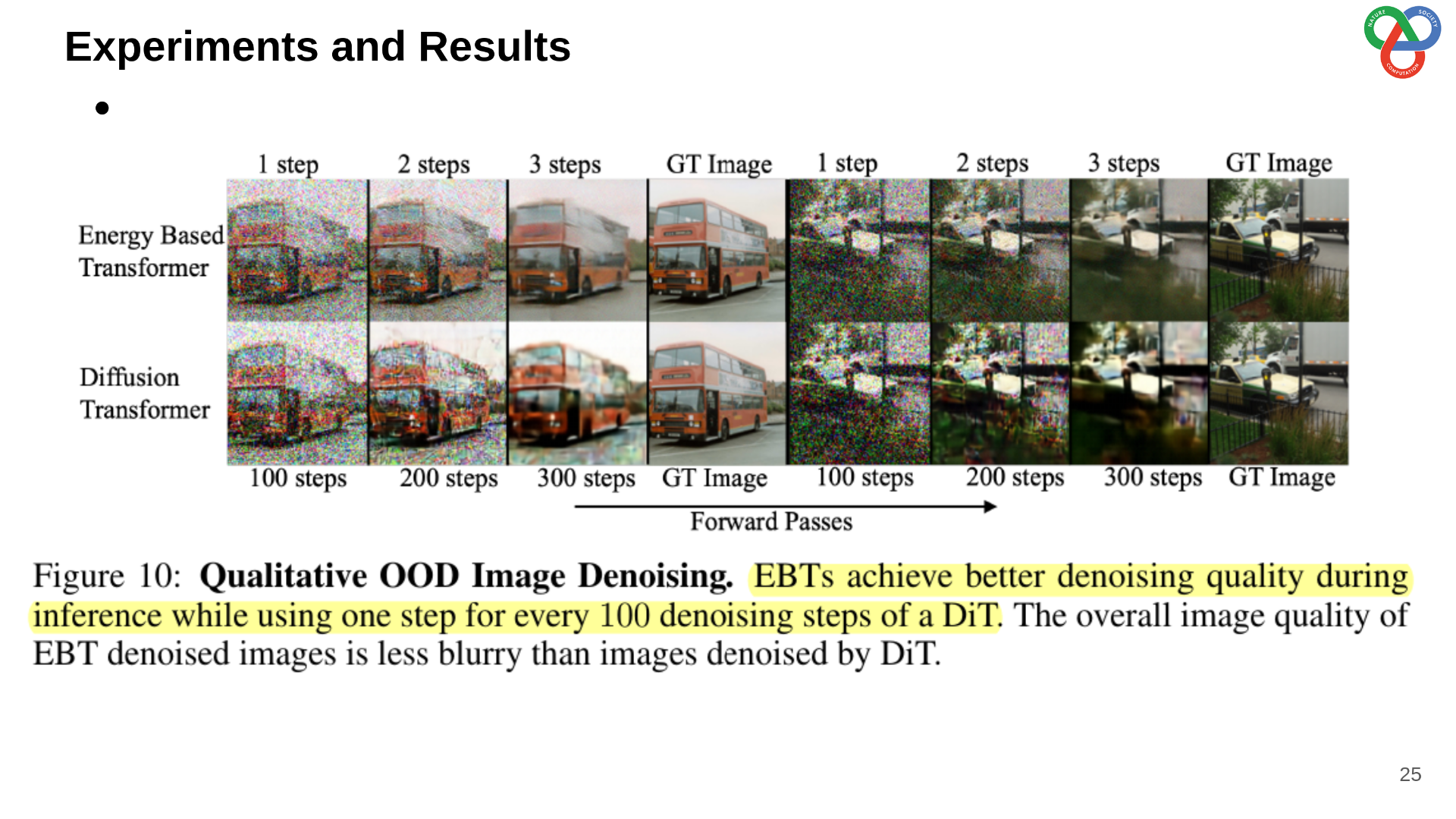

EBTs scale across both discrete (text) and continuous (vision) modalities. During training, they scale faster than the standard Transformer++ recipe, reaching an up to 35% higher scaling rate with respect to parameters, data, and compute. At inference time, System 2 Thinking improves EBT performance on language tasks by 29% more than it improves the Transformer++. On vision, EBTs outperform Diffusion Transformers on image denoising while requiring fewer forward passes.

Overall, EBTs present a general framework in which System 2-style reasoning is learned from unsupervised training alone, and the reported results suggest stronger generalization on downstream tasks than conventional approaches.

2. Learning Iterative Reasoning through Energy Minimization

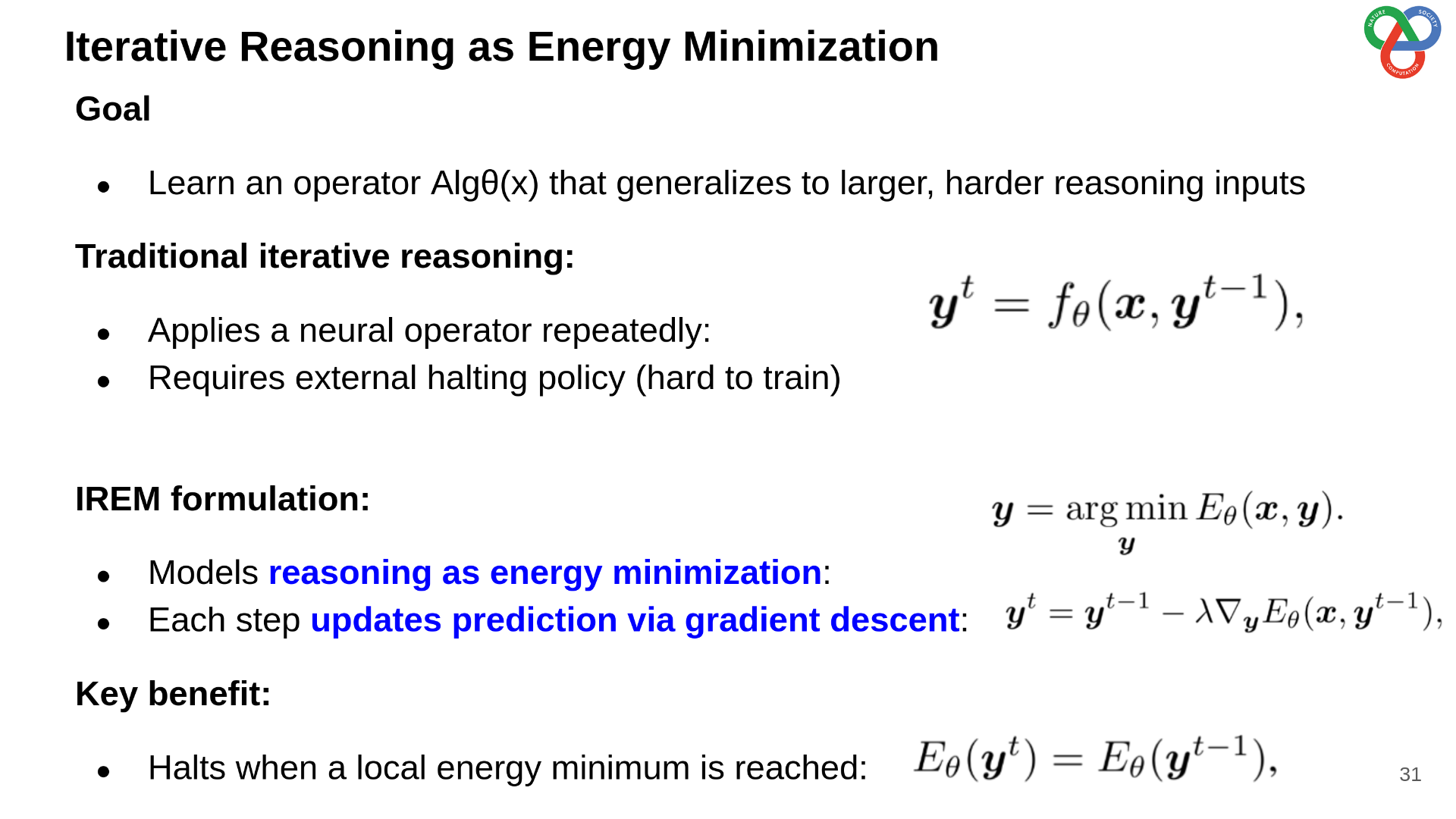

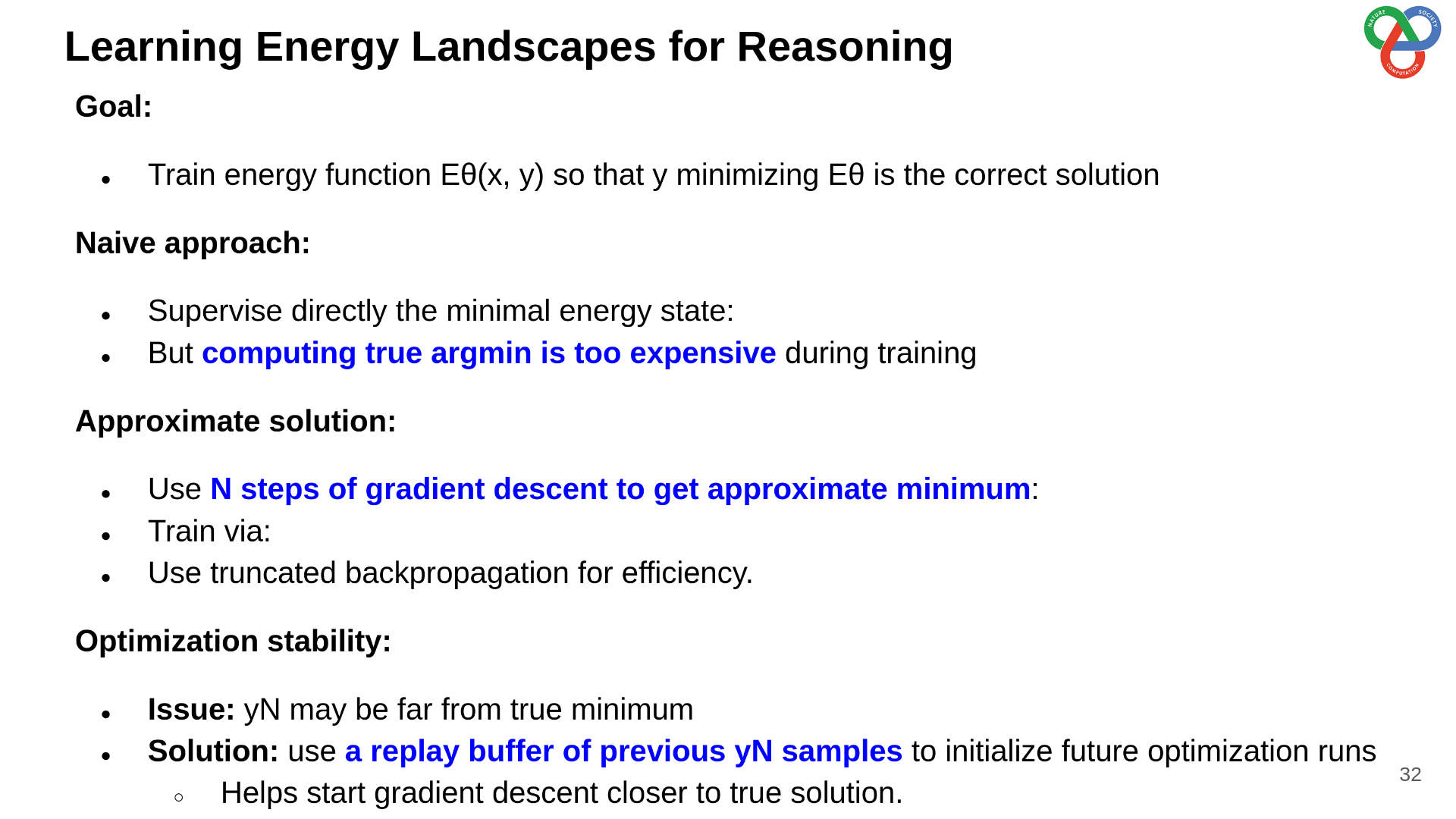

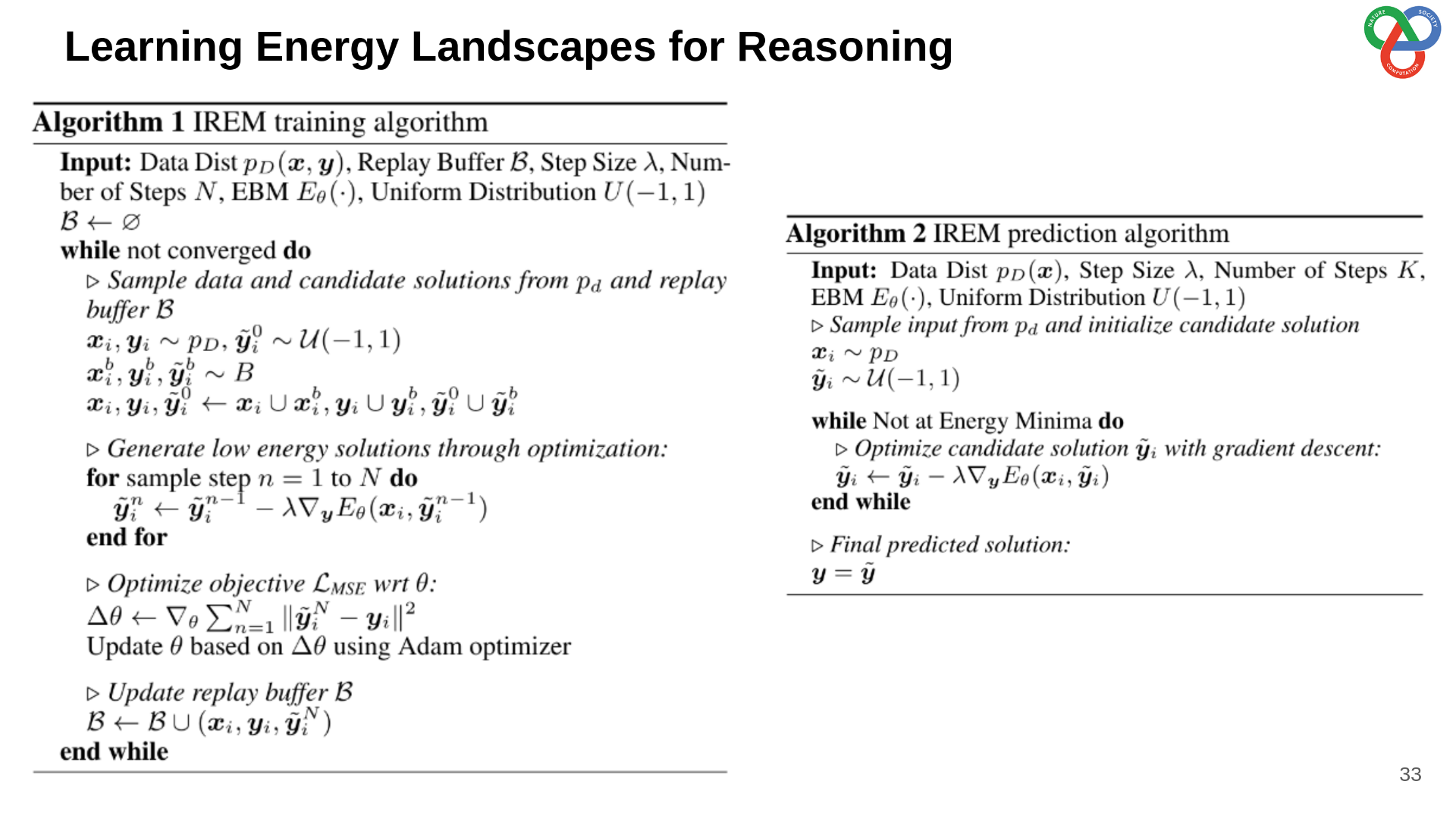

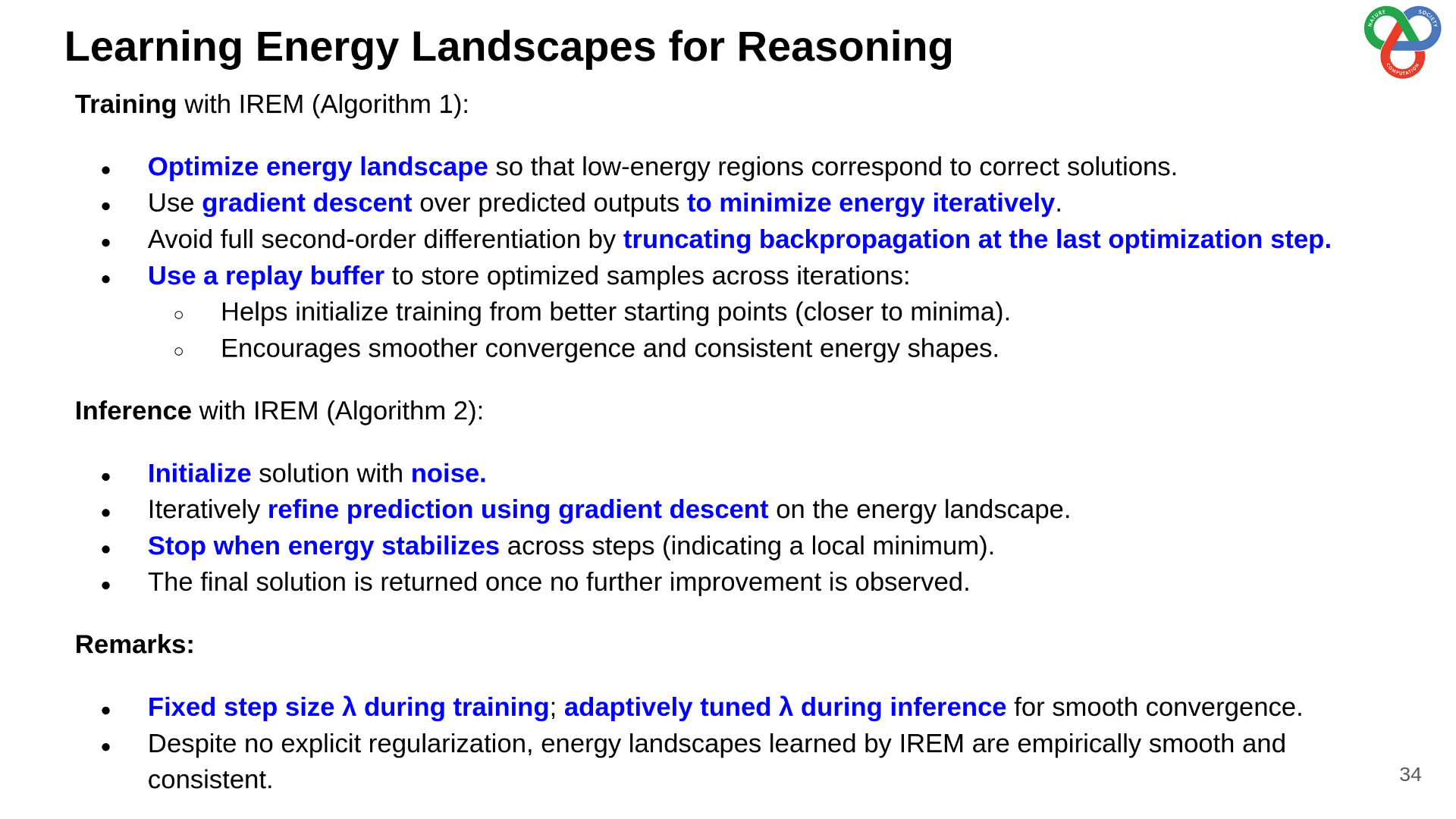

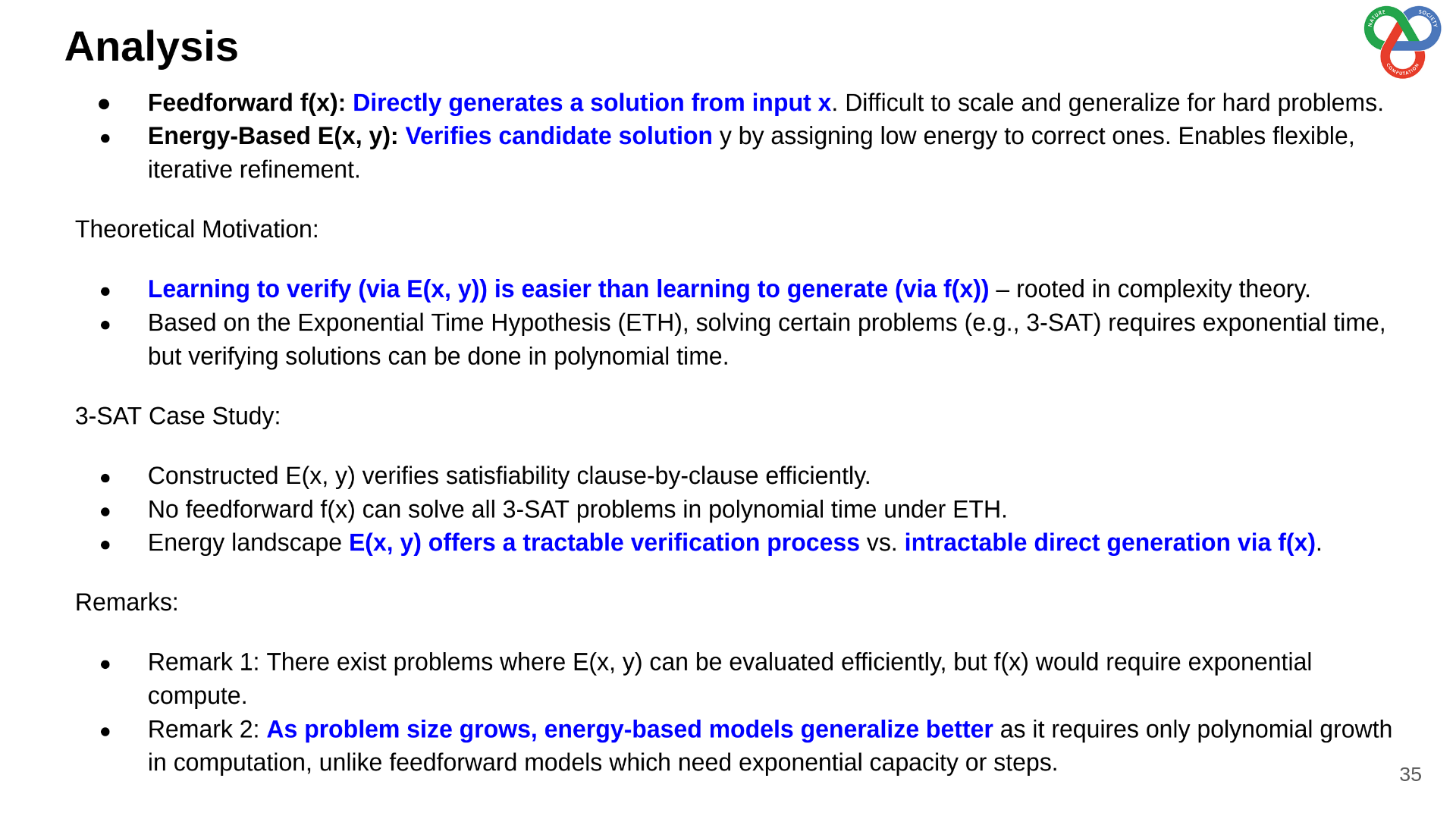

This earlier work proposes a framework where reasoning is formulated as energy minimization. A neural network parameterizes an energy landscape over candidate outputs, and reasoning proceeds iteratively by descending this landscape toward low-energy solutions.
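The descent described above can be written as a simple update rule. The notation is an assumption made for these notes rather than copied from the paper: $x$ is the problem input, $y^{t}$ the candidate solution after $t$ steps, $E_\theta$ the learned energy network, $\lambda$ a step size, and $T$ the number of optimization steps.

$$
y^{t+1} = y^{t} - \lambda \, \nabla_{y} E_\theta\!\left(x, y^{t}\right), \qquad t = 0, 1, \dots, T-1
$$

The final iterate $y^{T}$ is taken as the answer, so increasing $T$ corresponds to spending more computation on the same problem.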

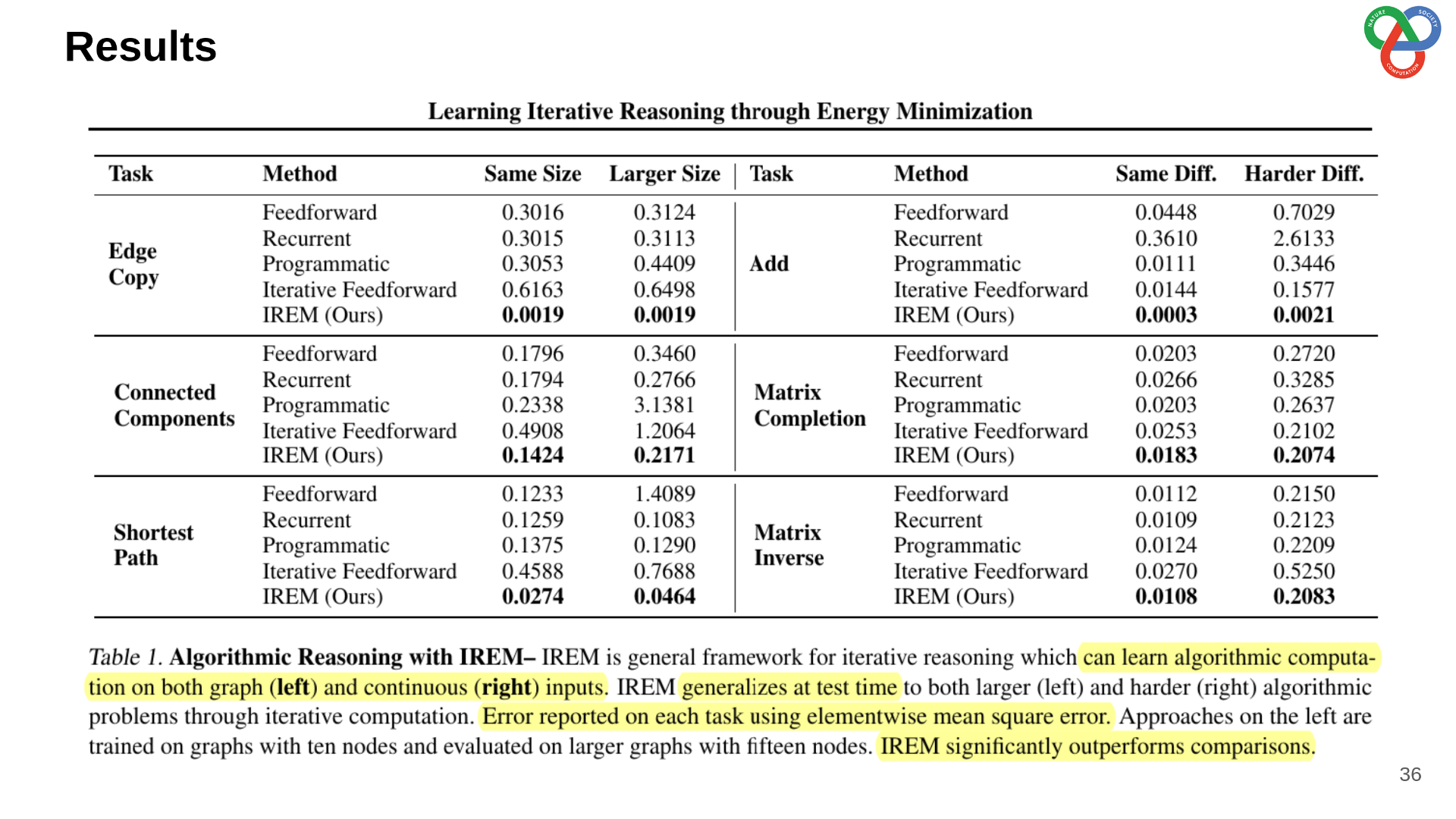

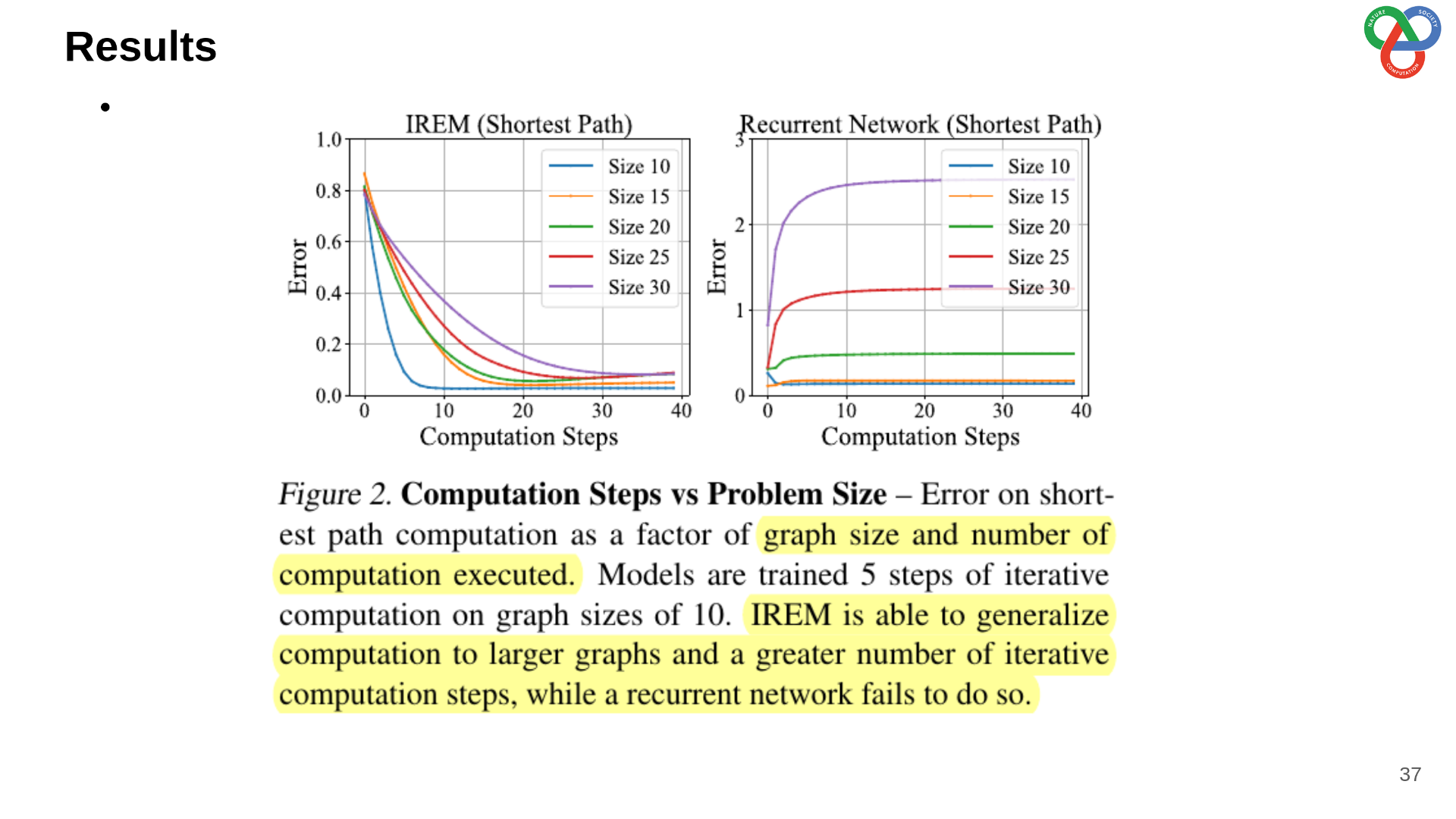

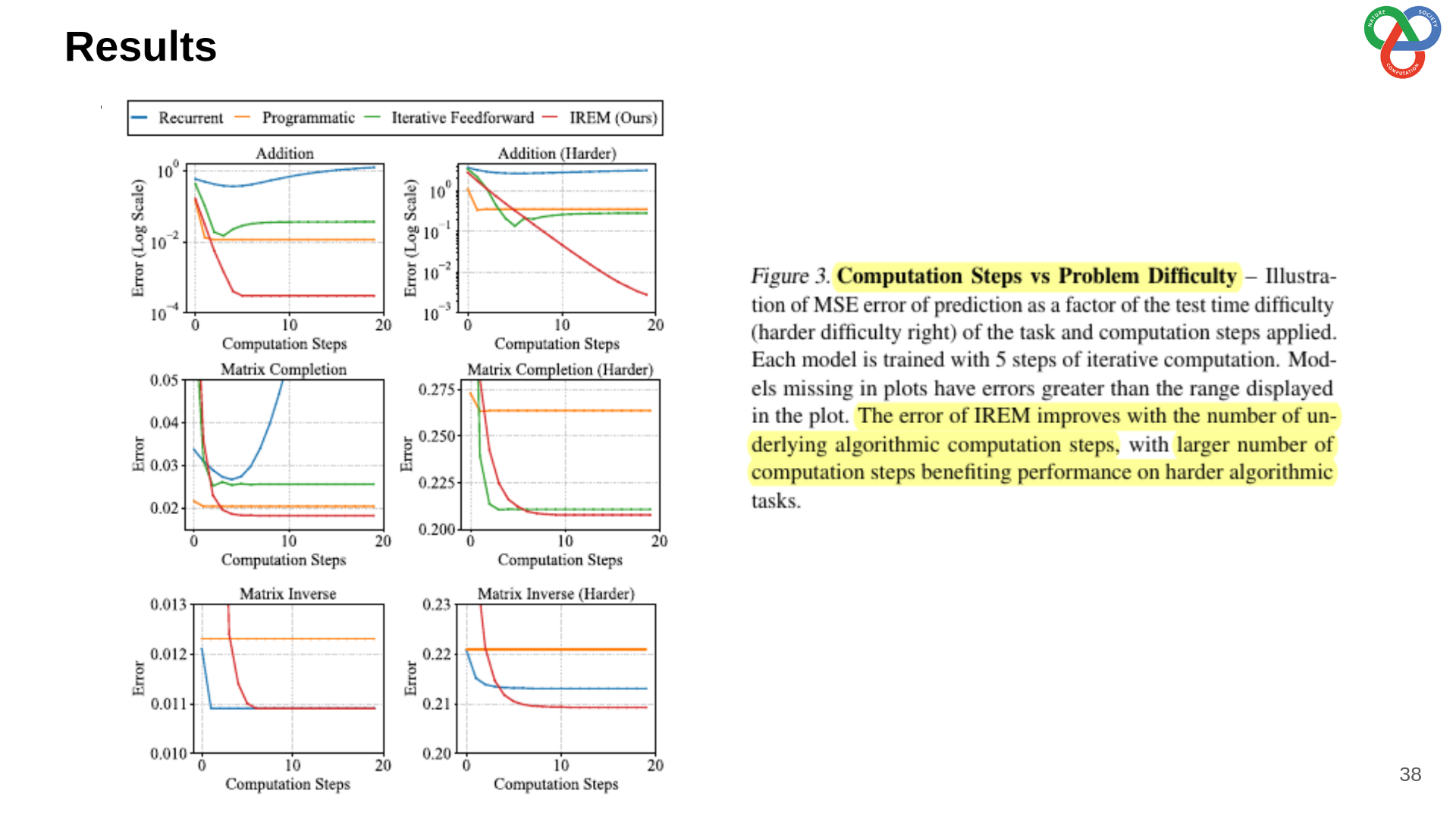

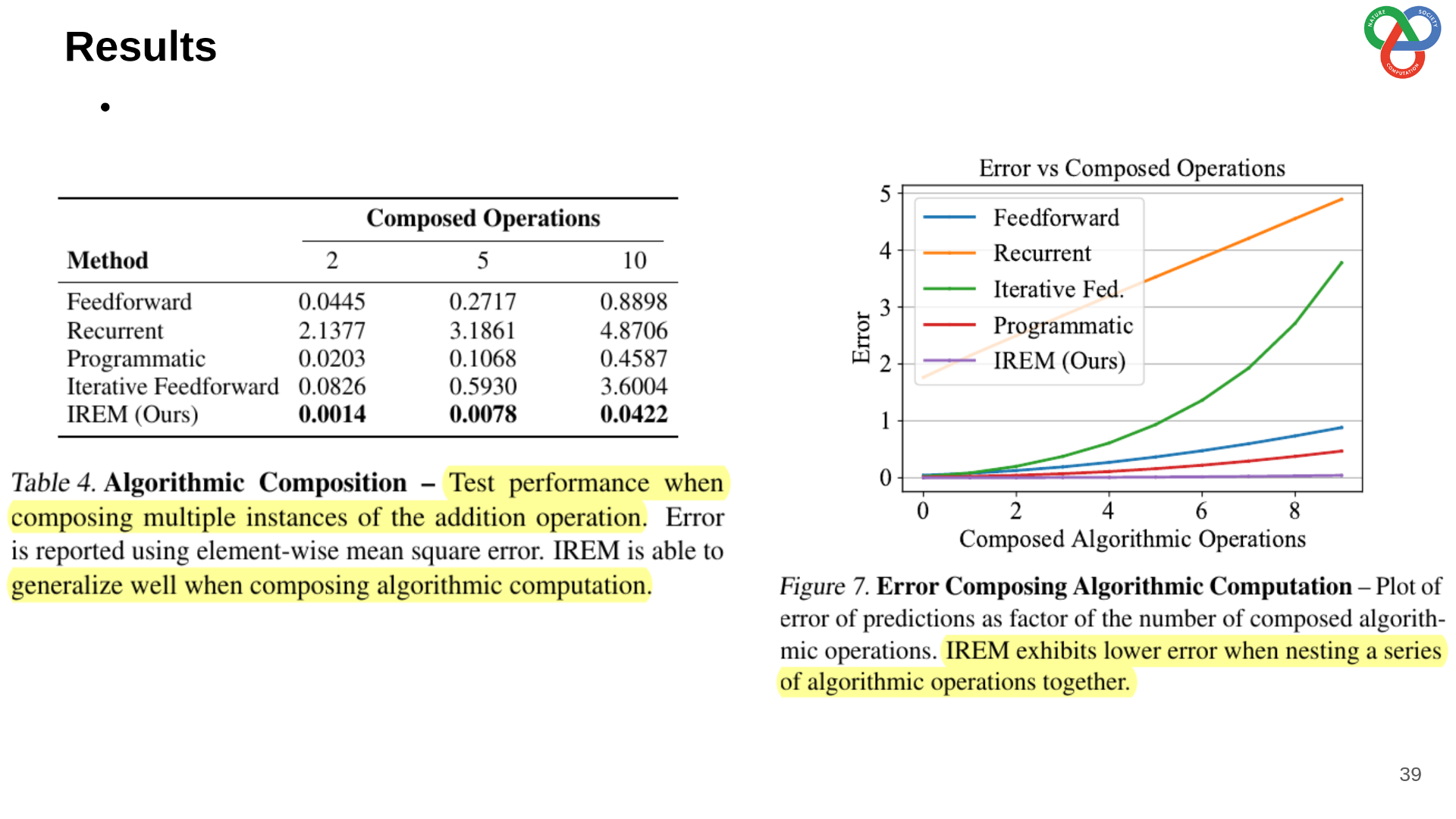

The key insight is that harder problems correspond to more complex energy landscapes. By allocating more optimization steps to these cases, the system effectively performs adaptive computation, mirroring how humans “think longer” on more difficult problems. Empirical results show improved performance on algorithmic reasoning tasks in both graph-based and continuous domains, including problems requiring nested reasoning.
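The adaptive-computation idea can be sketched with a toy example. Everything here is an illustrative assumption rather than the paper's setup: the hypothetical `minimize_adaptive` helper stops once the gradient is small, and "difficulty" is modeled crudely as a flatter quadratic landscape (smaller curvature), which simply takes more steps to descend.

```python
def make_grad(curvature, target):
    """Gradient of the toy energy 0.5 * curvature * (y - target) ** 2."""
    def grad(y):
        return curvature * (y - target)
    return grad


def minimize_adaptive(grad_fn, y_init=0.0, step_size=0.5, tol=1e-3, max_steps=1000):
    """Run gradient descent until the gradient magnitude drops below tol.

    Returns the final candidate and the number of update steps taken, so the
    amount of computation depends on the landscape, not on a fixed budget.
    """
    y = y_init
    for steps in range(max_steps):
        g = grad_fn(y)
        if abs(g) < tol:
            return y, steps
        y = y - step_size * g
    return y, max_steps


if __name__ == "__main__":
    _, easy_steps = minimize_adaptive(make_grad(curvature=1.0, target=1.0))
    _, hard_steps = minimize_adaptive(make_grad(curvature=0.1, target=1.0))
    print("easy problem steps:", easy_steps)
    print("hard problem steps:", hard_steps)
```

The stopping rule is a design choice for this sketch: a gradient threshold is one simple convergence test, and a fixed step budget chosen per problem would be another.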

My Notes

These two works illustrate the potential of energy-based models as reasoning engines:

- EBTs demonstrate that energy-based formulations can scale to large Transformer architectures, improving both training efficiency and inference-time reasoning.

- Iterative energy minimization provides a conceptual foundation for adaptive, step-by-step reasoning, aligning with human-like problem-solving.

Together, they suggest a promising direction: reframing inference as optimization, where computation is dynamically allocated based on task difficulty. The presentation slides are shared below.

Presentation Slides: Link to slides